Game AI Pro 1

- Author :: Multiple

- Publication Year :: 2014

- Read Date :: 2024-01-27

- Source :: Game_AI_Pro_(2013).pdf


Thoughts

Chapter 2 talks about neurology. This is used as an analogy for how signals propagate within the body and how they can propagate within your game’s AI. Interesting to note: the speed of signals within the body (your reflexes) is actually WAY slower than you might think, for example compared to internet speeds.

The book touches on many topics but doesn’t go deeply into any. That isn’t a bad thing. It is actually a good introduction to many AI concepts and can act as a sort of idea catalyst, giving you a jumping-off point for deciding which AI branch you want to learn more about (or which AI might work in your game).

Some of the info is a little dated (they talk about hundreds of pipes in a GPU as a big deal), but nothing that makes the concepts any less relevant.

Table of Contents

- 01: What is Game AI?

- 02: Informing Game AI through the Study of Neurology

- 03: Advanced Randomness Techniques for Game AI: Gaussian Randomness, Filtered Randomness, and Perlin Noise

- 04: Behavior Selection Algorithms - An Overview

- 05: Structural Architecture - Common Tricks of the Trade

- 06: The Behavior Tree Starter Kit

- 07: Real-World Behavior Trees in Script

- 08: Simulating Behavior Trees: A Behavior Tree/Planner Hybrid Approach

- 09: An Introduction to Utility Theory

- 10: Building Utility Decisions into Your Existing Behavior

- 11: Reactivity and Deliberation in Decision-Making Systems

- 12: Exploring HTN Planners through Example

- 13: Hierarchical Plan-Space Planning for Multi-unit Combat Maneuvers

- 14: Phenomenal AI Level-of-Detail Control with the LOD Trader

- 15: Runtime Compiled C++ for Rapid AI Development

- 16: Plumbing the Forbidden Depths: Scripting and AI

- 17: Pathfinding Architecture Optimizations

- 18: Choosing a Search Space Representation

- 19: Creating High-Order Navigation Meshes through Iterative Wavefront Edge Expansions

- 20: Precomputed Pathfinding for Large and Detailed Worlds on MMO Servers

- 21: Techniques for Formation Movement Using Steering Circles

- 22: Collision Avoidance for Preplanned Locomotion

- 23: Crowd Pathfinding and Steering Using Flow Field Tiles

- 24: Efficient Crowd Simulation for Mobile Games

- 25: Animation-Driven Locomotion with Locomotion Planning

- 26: Tactical Position Selection: An Architecture and Query Language

- 27: Tactical Pathfinding on a NavMesh

- 28: Beyond the Kung-Fu Circle: A Flexible System for Managing NPC Attacks

- 29: Hierarchical AI for Multiplayer Bots in Killzone 3

- 30: Using Neural Networks to Control Agent Threat Response

- 31: Crytek’s Target Tracks Perception System

- 32: How to Catch a Ninja: NPC Awareness in a 2D Stealth Platformer

- 33: Asking the Environment Smart Questions

- 34: A Simple and Robust Knowledge Representation System

- 35: A Simple and Practical Social Dynamics System

- 36: Breathing Life into Your Background Characters

- 37: Alibi Generation: Fooling All the Players All the Time

- 38: An Architecture Overview for AI in Racing Games

- 39: Representing and Driving a Race Track for AI Controlled Vehicles

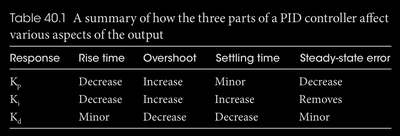

- 40: Racing Vehicle Control Systems using PID Controllers

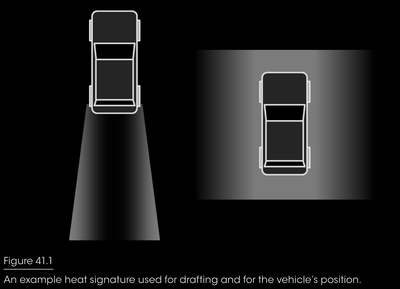

- 41: The Heat Vision System for Racing AI: A Novel Way to Determine Optimal Track Positioning

- 42: A Rubber-Banding System for Gameplay and Race Management

- 43: An Architecture for Character-Rich Social Simulation

- 44: A Control-Based Architecture for Animal Behavior

- 45: Introduction to GPGPU for AI

- 46: Creating Dynamic Soundscapes Using an Artificial Sound Designer

- 47: Tips and Tricks for a Robust Third-Person Camera System

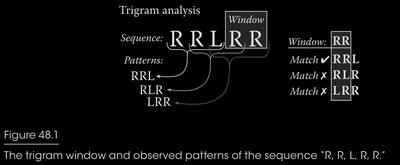

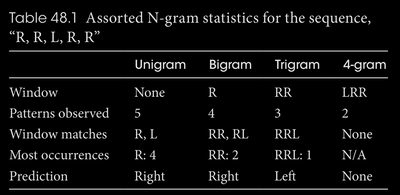

- 48: Implementing N-Grams for Player Prediction, Procedural Generation, and Stylized AI

Part 1 - General Wisdom

Part 2 - Architecture

Part 3 - Movement and Pathfinding

Part 4 - Strategy and Tactics

Part 5 - Agent Awareness and Knowledge Representation

Part 6 - Racing

Part 7 - Odds and Ends

Part 1 - General Wisdom

· Chapter 1 - What is Game AI?

- By: Kevin Dill

page 007:

- Brian Kernighan, codeveloper of Unix and the C programming language, is believed to have said, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.”

- Apparently the scenario AI for the original Warcraft would simply wait a fixed amount of time, and then start spawning a wave of units to attack you. It would spawn these units at the edge of the gray fog—which is to say, just outside of the visibility range of your units. It would continue spawning units until your defenses were nearly overwhelmed—and then it would stop, leaving you to mop up those units that remained and allowing you to win the fight.

- This approach seems to cross the line fairly cleanly into the realm of “cheating.” The AI doesn’t have to worry about building buildings, or saving money, or recruiting units—it just spawns whatever it needs. On the other hand, think about the experience that results. No matter how good or bad you are at the game, it will create an epic battle for you — one which pushes you to the absolute limit of your ability, but one in which you will ultimately, against all odds, be victorious.

· Chapter 2 - Informing Game AI through the Study of Neurology

- By: Brett Laming

page 018:

-

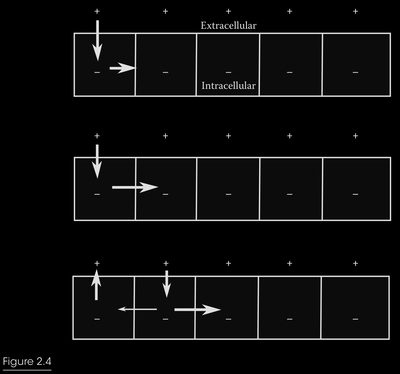

An illustration of action potential propagation through nerve fibers over time. The resulting swing in repolarization and the delay in membrane channels reopening prevent previously excited sites from firing again.

-

Schmitt trigger and hysteresis
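The Schmitt trigger idea maps directly onto AI state switches: use two thresholds instead of one so that an input hovering near a single cut-off cannot make the output flicker. A minimal sketch, assuming a hypothetical `HysteresisTrigger` that decides whether an NPC is alarmed from a 0..1 stimulus level (the thresholds are arbitrary):

```cpp
// Hypothetical two-threshold (Schmitt trigger) switch. The output turns on only
// when the input rises above onThreshold and turns off only when it falls below
// offThreshold; in between, the previous output is kept (the hysteresis band).
class HysteresisTrigger {
public:
    HysteresisTrigger(float onThreshold, float offThreshold)
        : on_(onThreshold), off_(offThreshold) {}

    bool update(float input) {
        if (!active_ && input > on_) {
            active_ = true;
        } else if (active_ && input < off_) {
            active_ = false;
        }
        return active_;
    }

    bool active() const { return active_; }

private:
    float on_;
    float off_;
    bool active_ = false;
};
```

With thresholds of 0.7 and 0.3, a stimulus oscillating around 0.5 leaves the NPC in whatever state it was already in; a single threshold at 0.5 would toggle it on almost every sample.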

page 019:

- Notice how a potentially hard problem, the transmission of action potentials, can be better conceptualized by compartmentalizing the space (Figure 2.4). At this stage, each compartment represents just a local area, and the comprehension is immediately simplified. Now consider a tactical warfare simulation with a realistic AI communication system. Rather than working out a route for each agent “A” to get a message to another agent “B,” it is easier to imagine all such agents on a compartmentalized grid, posting messages to neighboring agents who then carry the transmission that way. In doing so, we get some nice extras for free: the potential for spies, utterance signaling, realistic transmission delays, and bigger signaling distances covered by nonverbal gestures or field phones. In either case, by thinking about the problem as a different representation, we have instantly simplified the procedure and got some realistic wins as well!
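To make the compartment idea concrete, here is a minimal sketch, assuming agents sit on a hypothetical square grid and can only hand a message to their four direct neighbors, one hop per simulation tick. The arrival time at each cell falls out of a breadth-first flood fill, which also produces the realistic transmission delay for free; a gap in the chain of agents simply blocks the message.

```cpp
#include <queue>
#include <utility>
#include <vector>

// Hypothetical helper: returns the tick at which a message posted by the agent at
// (startX, startY) reaches each cell when every agent relays it to its four
// neighbours once per tick. Empty cells cannot relay; unreached cells stay -1.
std::vector<std::vector<int>> propagateMessage(
        const std::vector<std::vector<bool>>& occupied, int startX, int startY) {
    const int height = static_cast<int>(occupied.size());
    const int width = height > 0 ? static_cast<int>(occupied[0].size()) : 0;
    std::vector<std::vector<int>> arrival(height, std::vector<int>(width, -1));
    if (startX < 0 || startX >= width || startY < 0 || startY >= height ||
        !occupied[startY][startX]) {
        return arrival;
    }
    const int dx[] = {1, -1, 0, 0};
    const int dy[] = {0, 0, 1, -1};
    std::queue<std::pair<int, int>> frontier;  // (x, y) cells holding the message
    arrival[startY][startX] = 0;
    frontier.push(std::make_pair(startX, startY));
    while (!frontier.empty()) {
        const int x = frontier.front().first;
        const int y = frontier.front().second;
        frontier.pop();
        for (int i = 0; i < 4; ++i) {
            const int nx = x + dx[i];
            const int ny = y + dy[i];
            if (nx < 0 || nx >= width || ny < 0 || ny >= height) continue;
            if (!occupied[ny][nx] || arrival[ny][nx] != -1) continue;
            arrival[ny][nx] = arrival[y][x] + 1;  // one hop costs one tick
            frontier.push(std::make_pair(nx, ny));
        }
    }
    return arrival;
}
```

In a running game you would advance the frontier one step per tick instead of computing every arrival up front, which is what makes interception (the spy case) possible mid-transmission.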

page 022:

- Hence the Rosenblatt perceptron can be specified as follows: for a vector of potential inputs $v_i = [-1, i_1, \dots, i_j]$ and the vector of weights $v_w = [\omega_0, \omega_1, \dots, \omega_j]$, where $\omega_0$ represents the bias weight, always paired with a constant input $(i_0 = -1)$:

$$y = \begin{cases} 1 & \text{if } v_i \cdot v_w > 0 \\ 0 & \text{otherwise} \end{cases}$$

- Rosenblatt’s perceptron has two phases, a learning pass and a prediction pass. In the learning pass, a definition of $v_i$ is passed in as well as a desired output, $y_{ideal}$, normalized to a range of $0 \dots 1$. On each presentation of $v_i$ and $y_{ideal}$, a learning rule is applied in a similar form to Hebb’s.

- Here, each weight is affected by the difference between the overall output $y$ and the expected output $y_{ideal}$, multiplied by the contributing input and the learning rate $\mu$:

$$\omega_{i,t+1} = \omega_{i,t} + \mu (y_{ideal} - y) \, i_i$$

- So by presenting a series of these vectors and desired outputs, it is possible to train this artificial neuron. Afterwards it can produce an output for new, untrained conditions simply by applying the output calculation (the prediction pass) without further training.
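The two passes above fit in a few lines. A minimal sketch, assuming inputs are already normalized and keeping the same convention of a constant input of -1 paired with the bias weight $\omega_0$; the class name and default learning rate are illustrative choices:

```cpp
#include <cstddef>
#include <vector>

// Rosenblatt perceptron; weights_[0] is the bias weight on a constant input of -1.
class Perceptron {
public:
    explicit Perceptron(std::size_t inputCount, double learningRate = 0.1)
        : weights_(inputCount + 1, 0.0), mu_(learningRate) {}

    // Prediction pass: y = 1 if v_i . v_w > 0, else 0.
    int predict(const std::vector<double>& inputs) const {
        double sum = -1.0 * weights_[0];
        for (std::size_t j = 0; j < inputs.size(); ++j) {
            sum += inputs[j] * weights_[j + 1];
        }
        return sum > 0.0 ? 1 : 0;
    }

    // Learning pass: w_i <- w_i + mu * (y_ideal - y) * i_i for every weight.
    void train(const std::vector<double>& inputs, int yIdeal) {
        const double error = static_cast<double>(yIdeal - predict(inputs));
        weights_[0] += mu_ * error * -1.0;  // the bias input is the constant -1
        for (std::size_t j = 0; j < inputs.size(); ++j) {
            weights_[j + 1] += mu_ * error * inputs[j];
        }
    }

private:
    std::vector<double> weights_;
    double mu_;
};
```

Presenting the four rows of a Boolean AND table repeatedly is enough for this to converge, since AND is linearly separable; XOR never converges, which is the classic limit of a single perceptron.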

page 023:

- The perceptron is essentially a Boolean classifier.

For example, here is a utility equation from a recent talk [Mark 10]:

$$coverChance = 0.2+reloadNeed \times 1.0+healNeed \times 1.5+threatRating \times 1.3$$

- Now, these values probably took some degree of trial and error to arrive at and probably had to be normalized into sensible ranges. But consider this:

$$coverChance = 1 \times w_0 + reloadNeed \times w_1+healNeed \times w_2+threatRating \times w_3$$

- If we present the player with a number of scenarios and ask them whether they would take cover, we can easily get values that, after enough questions, are still easily accessible in meaning and therefore can make better initial guesses at the equation that might want to drive our AI.

- A final nice property is that we don’t necessarily need to answer the false side of the equation if we don’t want to; we could just supply random values to represent false. Imagine each time the player goes into cover we measure these values and return true, following it by random values that return false. Even if we happen to get a lucky random true cover condition, over time it will just represent noise. If we then clamp all weights to sensible ranges, potentially found by training in test circumstances, we now have a quick to compute run-time predictor of what determines when the player may think of going into cover!

page 026:

- We should always bear in mind that some of the quickest processing at 200 ms or so is still 6 frames at 30 frames per second. This means that distributing AI planning over frames is not only viable, it may be more biologically plausible.
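One common way to act on this is to give the AI a fixed amount of planning work per frame and let the rest carry over. A minimal sketch, assuming a hypothetical queue of agents waiting for a (re)plan and a budget counted in plans per frame rather than in milliseconds:

```cpp
#include <cstddef>
#include <deque>
#include <functional>
#include <utility>

// Hypothetical time-slicer: each update() services at most plansPerFrame queued
// planning jobs and leaves the remainder for later frames.
class TimeSlicedPlanner {
public:
    explicit TimeSlicedPlanner(std::size_t plansPerFrame) : budget_(plansPerFrame) {}

    void request(std::function<void()> planJob) { pending_.push_back(std::move(planJob)); }

    // Called once per frame; returns how many plans were computed this frame.
    std::size_t update() {
        std::size_t done = 0;
        while (done < budget_ && !pending_.empty()) {
            std::function<void()> job = std::move(pending_.front());
            pending_.pop_front();
            job();
            ++done;
        }
        return done;
    }

    std::size_t pendingCount() const { return pending_.size(); }

private:
    std::size_t budget_;
    std::deque<std::function<void()>> pending_;
};
```

With five requests and a budget of two, the last agent gets its plan on the third frame, roughly 100 ms at 30 frames per second, which is still inside the 200 ms figure above.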

· Chapter 3 - Advanced Randomness Techniques for Game AI: Gaussian Randomness, Filtered Randomness, and Perlin Noise

- By: Steve Rabin, Jay Goldblatt, and Fernando Silva

page 030:

-

Normal distributions (also known as Gaussian distributions or bell curves)

-

There is randomness in these distributions, but they are not uniformly random. For example, the chance of a man growing to be 6 feet tall is not the same as the chance of him growing to a final height of 5 feet tall or 7 feet tall. If the chance were the same, then the distribution would be uniformly random.

-

Some random things in life do show a uniform distribution, such as the chance of giving birth to a boy or a girl. However, the large majority of distributions in life are closer to a normal distribution than a uniform distribution. But why?

-

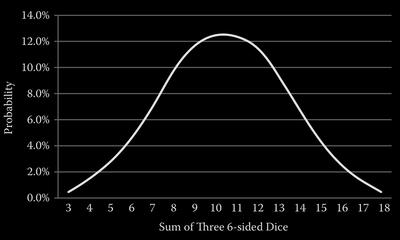

The answer is quite simple and is explained by the central limit theorem. Basically, when many random variables are added together, the resulting sum will follow a normal distribution. This can be seen when you roll three 6-sided dice. While there is a uniform chance of a single die landing on any face, the chance of rolling three dice and their sum equaling the maximum of 18 is not uniform with regard to other outcomes. For example, the odds of three dice adding up to 18 is 0.5% while the odds of three dice adding up to 10 is 12.5%. Figure 3.1 shows that the sum of rolling three dice actually follows a normal distribution.
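Both percentages can be checked by brute force: enumerate all 6 × 6 × 6 = 216 equally likely outcomes and count how many produce each sum.

```cpp
#include <array>

// Counts how many of the 216 equally likely outcomes of three six-sided dice
// produce each sum; index = sum (3..18), unused indices stay 0.
std::array<int, 19> threeDiceSumCounts() {
    std::array<int, 19> counts{};
    for (int a = 1; a <= 6; ++a)
        for (int b = 1; b <= 6; ++b)
            for (int c = 1; c <= 6; ++c)
                ++counts[a + b + c];
    return counts;
}
```

Only one outcome (6, 6, 6) sums to 18, so P(18) = 1/216 ≈ 0.46%, while 27 outcomes sum to 10, so P(10) = 27/216 = 12.5%. The counts climb from 1 at either extreme to 27 at 10 and 11, which is the bell shape of Figure 3.1.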

page 031:

- The probability of the sum of rolling three six-sided dice will approximately follow a normal distribution, even though the outcomes of any single die have a uniform distribution. This is due to the central limit theorem.

page 032:

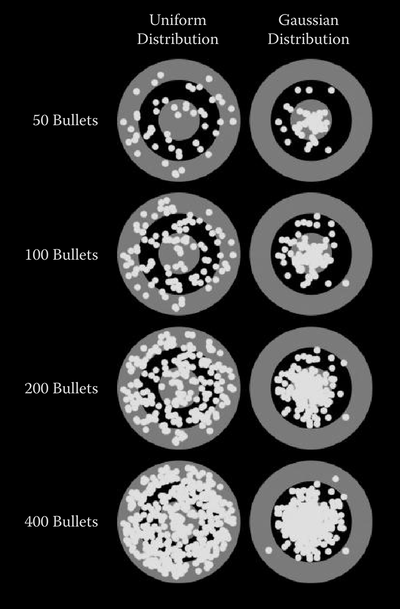

- Figure 3.2 shows both uniform and Gaussian bullet spreads on a target (generated from the sample demo on the book’s website). It should be evident that the Gaussian one on the right looks much more realistic, but how is it generated? The trick is to use polar coordinates and a mix of both uniform and Gaussian randomness. First, a random uniform angle is generated from 0 to 360°. This value is used as a polar coordinate to determine an angle around the center of the target. It is important for this value to be uniform because there should be an equal chance of any angle. Second, a random Gaussian number is generated to determine the distance from the center of the target. By combining the random uniform angle and the random Gaussian distance from the center, you can recreate a very realistic bullet spread.
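A minimal sketch of that polar recipe, assuming the standard library's `std::normal_distribution` for the Gaussian part and taking the absolute value of the sample so the distance from the center is never negative. `spread` is a hypothetical tuning parameter (the standard deviation, in target units):

```cpp
#include <cmath>
#include <random>

struct Point2 {
    double x;
    double y;
};

// Uniform angle around the aim point combined with a Gaussian distance from it.
Point2 gaussianBulletHit(std::mt19937& rng, double spread) {
    const double twoPi = 2.0 * std::acos(-1.0);
    std::uniform_real_distribution<double> angleDist(0.0, twoPi);
    std::normal_distribution<double> radiusDist(0.0, spread);
    const double angle = angleDist(rng);
    const double radius = std::fabs(radiusDist(rng));  // distance must be non-negative
    return {radius * std::cos(angle), radius * std::sin(angle)};
}
```

Because the absolute value of a Gaussian stays within two standard deviations about 95% of the time, most hits cluster tightly around the aim point while an occasional stray lands far out.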

page 034:

- Randomness is too random (for many uses in games).

- Small runs of randomness don’t look random.

page 036:

- Some rules to make random number generation “feel” less random. Honestly, these feel clunky and heavy-handed. I would do something like change the weights of the probabilities per roll: start with an H:T 50:50 coin flip. Heads. Second flip at 40:60. Heads. Third flip at 30:70. Continue skewing the weight until the result is tails, then skew the other way.
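One reading of that weighted-flip idea, as a sketch: every repeat of the same side lowers the chance of getting that side again by 10 percentage points, and a different result starts a new streak that skews against the new side. The step size, the 10% floor, and the class name are assumptions; the uniform roll is passed in so the rule is testable.

```cpp
// Hypothetical coin whose odds drift against the side that keeps repeating.
// The chance of repeating the last side drops by `step` per flip in the current
// streak, but never below `minChance`.
class StreakSkewedCoin {
public:
    explicit StreakSkewedCoin(double step = 0.1, double minChance = 0.1)
        : step_(step), minChance_(minChance) {}

    // Chance that the next flip comes up heads.
    double headsChance() const {
        if (streak_ == 0) return 0.5;
        double repeat = 0.5 - step_ * streak_;
        if (repeat < minChance_) repeat = minChance_;
        return lastWasHeads_ ? repeat : 1.0 - repeat;
    }

    // `roll` is a uniform random number in [0, 1); returns true for heads.
    bool flip(double roll) {
        const bool heads = roll < headsChance();
        if (streak_ > 0 && heads == lastWasHeads_) {
            ++streak_;
        } else {
            streak_ = 1;  // a new side starts its own streak
            lastWasHeads_ = heads;
        }
        return heads;
    }

private:
    double step_;
    double minChance_;
    int streak_ = 0;
    bool lastWasHeads_ = false;
};
```

Heads, heads gives 40:60 then 30:70 as in the note; the first tails after that flips the bias to 60:40.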

page 037:

- The open source program ENT will run a variety of metrics to evaluate the randomness, so it would be advisable to run these benchmarks if you design your own rules… https://github.com/Fourmilab/ent_random_sequence_tester

page 040:

-

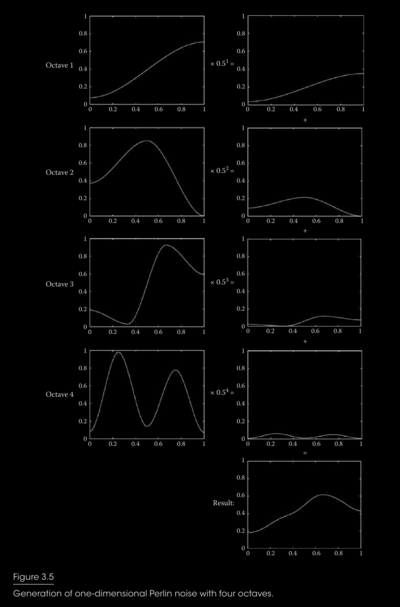

In one-dimension, Perlin noise is constructed by first deciding how many octaves to use. Each octave contributes to the signal detail at a particular scale, with higher octaves adding more fine-grained detail. Each octave is computed individually and then they are added to each other to produce the final signal. Figure 3.5 shows one-dimensional Perlin noise, constructed with four octaves.

-

In order to explain how each octave signal is produced, let’s start with the first one. The first octave is computed by starting and ending the interval with two different uniform random numbers, in the range [0, 1]. The signal in the middle is computed by applying a mathematical function that interpolates between the two. The ideal function to use is the S-curve function $6t^5 - 15t^4 + 10t^3$ because it has many nice mathematical properties, such as being smooth in the first and second derivatives [Perlin 02]. This is desirable so that the signal contained within higher octaves is smooth.

-

For the second octave, we choose three uniform random numbers, place them equidistant from each other, and then interpolate between them using our sigmoid function. Similarly, for the third octave, we choose five uniform random numbers, place them equidistant from each other, and then interpolate between them. The number of uniform random numbers for a given octave is equal to $2^{n−1} + 1$. Figure 3.5 shows four octaves with randomly chosen numbers within each octave.

-

Once we have the octaves, the next step is to scale each octave with an amplitude. This will cause the higher octaves to progressively contribute to the fine-grained variance in the final signal. Starting with the first octave, we multiply the signal by an amplitude of 0.5, as shown in Figure 3.5. The second octave is multiplied by an amplitude of 0.25, and the third octave is multiplied by an amplitude of 0.125, and so on. The formula for the amplitude at a given octave is $p_i$ , where $p$ is the persistence value and $i$ is the octave (our example used a persistence value of 0.5). The persistence value will control how much influence higher octaves have, with high values of persistence giving more weight to higher octaves ( producing more high-frequency noise in the final signal).

-

Now that the octaves have been appropriately scaled, we can add them together to get our final one-dimensional Perlin noise signal, as shown at the bottom right of Figure 3.5. While this is all fine and good, it is important to realize that for the purposes of game AI, you are not going to compute and store the entire final signal, since there is no need to have the whole thing at once. Instead, given a particular time along the signal, in the range $[0, 1]$ along the x-axis, you’ll just compute that particular point as needed for your simulation. So if you want the point in the middle of the final signal, you would compute the individual signal in each octave at time 0.5, scale each octave value with their correct amplitude, and add them together to get a single value. You can then run your simulation at any rate by requesting the next point at 0.500001, 0.51, or 0.6, for example.
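A minimal sketch of sampling that one-dimensional signal at a single point t, following the construction above: octave n holds $2^{n-1} + 1$ uniform random values spaced evenly over [0, 1], interpolated with $6t^5 - 15t^4 + 10t^3$ and scaled by $p^n$. Interpolating random values at lattice points like this is often called value noise (Perlin's own algorithm interpolates random gradients instead); the sketch follows the chapter's description. The class name and the seeded `std::mt19937` are assumptions.

```cpp
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical octave-noise sampler built exactly as described in the notes.
class OctaveNoise1D {
public:
    OctaveNoise1D(int octaveCount, double persistence, unsigned seed)
        : persistence_(persistence) {
        std::mt19937 rng(seed);
        std::uniform_real_distribution<double> uniform(0.0, 1.0);
        for (int n = 1; n <= octaveCount; ++n) {
            std::vector<double> values((std::size_t{1} << (n - 1)) + 1);  // 2^(n-1) + 1 points
            for (double& v : values) v = uniform(rng);
            octaves_.push_back(values);
        }
    }

    // Samples the summed signal at t in [0, 1]; nothing else is computed or stored.
    double sample(double t) const {
        double total = 0.0;
        for (std::size_t i = 0; i < octaves_.size(); ++i) {
            const std::vector<double>& v = octaves_[i];
            const double scaled = t * static_cast<double>(v.size() - 1);
            std::size_t left = static_cast<std::size_t>(scaled);
            if (left >= v.size() - 1) left = v.size() - 2;  // t = 1 uses the last segment
            const double s = sCurve(scaled - static_cast<double>(left));
            const double octaveValue = v[left] + s * (v[left + 1] - v[left]);
            total += std::pow(persistence_, static_cast<double>(i + 1)) * octaveValue;
        }
        return total;
    }

private:
    // 6t^5 - 15t^4 + 10t^3 in Horner form.
    static double sCurve(double t) { return t * t * t * (t * (t * 6.0 - 15.0) + 10.0); }

    double persistence_;
    std::vector<std::vector<double>> octaves_;
};
```

With a persistence of 0.5 the amplitudes 0.5 + 0.25 + 0.125 + 0.0625 sum to less than 1, so the output stays inside [0, 1], and consecutive requests such as 0.5 and 0.500001 return nearly identical values.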

page 042:

- Controlling Perlin Noise

- As alluded to in the previous section, there are several controls that will allow you to customize the randomness of the noise. The following list is a summary.

- Number of octaves: Lower octaves offer larger swings in the signal while higher octaves offer more fine-grained noise. This can be randomized within a population as well, so that some individuals have more octaves than others when generating a particular behavior trait.

- Range of octaves: You can have any range, for example octaves 4 through 8. You do not have to start with octave 1. Again, the ranges can be randomized within a population.

- Amplitude at each octave: The choice of amplitude at each octave can be used to control the final signal. The higher the amplitude, the more that octave will influence the final signal. Simply ensure that the sum of amplitudes across all octaves does not exceed 1.0 if you don’t want the final signal to exceed 1.0.

- Choice of interpolation: The S-curve function is commonly used in Perlin noise, with original Perlin noise using $3t^2 - 2t^3$ [Perlin 85] and improved Perlin noise using $6t^5 - 15t^4 + 10t^3$ (smooth in the second derivative) [Perlin 02]. However, you might be able to get other interesting effects by choosing a different formula [Komppa 10].
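Differentiating the two S-curves shows where “smooth in the second derivative” comes from. Both go from 0 to 1 with zero slope at each end, but only the quintic also has zero curvature there, so neighbouring interpolation segments join with a continuous second derivative:

$$
\begin{aligned}
f(t) &= 3t^2 - 2t^3, & f'(t) &= 6t(1 - t), & f''(t) &= 6 - 12t, & f''(0) &= 6,\; f''(1) = -6 \\
g(t) &= 6t^5 - 15t^4 + 10t^3, & g'(t) &= 30t^2(t - 1)^2, & g''(t) &= 60t(t - 1)(2t - 1), & g''(0) &= g''(1) = 0
\end{aligned}
$$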

Part 2 - Architecture

· Chapter 4 - Behavior Selection Algorithms - An Overview

- By: Michael Dawe, Steve Gargolinski, Luke Dicken, Troy Humphreys, and Dave Mark

page 048:

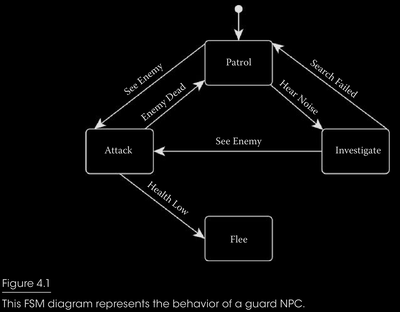

- Finite-State Machines

page 049:

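```cpp
#include <list>

class FSMTransition;

class FSMState
{
public:
    virtual ~FSMState() = default;
    virtual void onEnter() {}
    virtual void onUpdate() {}
    virtual void onExit() {}
    std::list<FSMTransition*> transitions;  // pointers, since transitions are polymorphic
};

class FSMTransition
{
public:
    virtual ~FSMTransition() = default;
    virtual bool isValid() = 0;
    virtual FSMState* getNextState() = 0;
    virtual void onTransition() {}
};

class FiniteStateMachine
{
public:
    void update();  // runs the algorithm described below, once per tick
    std::list<FSMState*> states;
    FSMState* initialState = nullptr;
    FSMState* activeState = nullptr;
};
```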

- The FiniteStateMachine class contains a list of all states in our FSM, as well as the initial state and the current active state. It also contains the central update() function, which is called each tick and is responsible for running our behavioral algorithm as follows:
  - Call isValid() on each transition in activeState.transitions until isValid() returns true or there are no more transitions.
  - If a valid transition is found, then:
    - Call activeState.onExit()
    - Set activeState to validTransition.getNextState()
    - Call activeState.onEnter()
  - If a valid transition is not found, then call activeState.onUpdate().

- With this structure in place, it’s a matter of setting up transitions and filling out the onEnter(), onUpdate(), onExit(), and onTransition() functions to produce the desired AI behavior.
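A minimal runnable sketch of that update() loop. It swaps the virtual methods of the class outline for `std::function` callbacks so the whole example fits in one block, and it calls `onTransition()` between `onExit()` and `onEnter()`; the steps above do not say where that call belongs, so that placement, like entering the initial state in the constructor, is an assumption.

```cpp
#include <functional>
#include <string>
#include <vector>

struct FSMState;

struct FSMTransition {
    std::function<bool()> isValid;
    FSMState* nextState = nullptr;
    std::function<void()> onTransition = [] {};
};

struct FSMState {
    std::string name;
    std::function<void()> onEnter = [] {};
    std::function<void()> onUpdate = [] {};
    std::function<void()> onExit = [] {};
    std::vector<FSMTransition> transitions;
};

struct FiniteStateMachine {
    FSMState* activeState = nullptr;

    explicit FiniteStateMachine(FSMState* initialState) : activeState(initialState) {
        activeState->onEnter();
    }

    // One tick: take the first valid transition, otherwise update the active state.
    void update() {
        for (FSMTransition& transition : activeState->transitions) {
            if (transition.isValid()) {
                activeState->onExit();
                transition.onTransition();
                activeState = transition.nextState;
                activeState->onEnter();
                return;
            }
        }
        activeState->onUpdate();
    }
};
```

Because update() returns right after switching, the new state's onUpdate() first runs on the following tick, matching the listed steps.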

page 050:

-

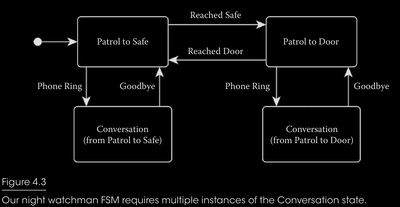

Hierarchical Finite-State Machines

-

Adding the second, third, or fourth state to an NPC’s FSM is usually structurally trivial, as all that’s needed is to hook up transitions to the few existing required states. However, if you’re nearing the end of development and your FSM is already complicated with 10, 20, or 30 existing states, then fitting your new state into the existing structure can be extremely difficult and error-prone.

-

You want to add a conversation state, but want to return to the patrol “direction” after the conversation? You’ll need 2 distinct states.

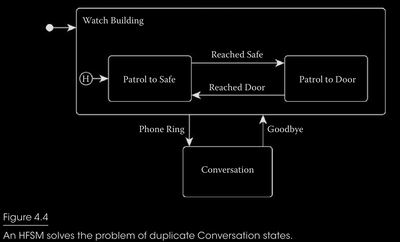

- if we nest our two Patrol states into a state machine called Watch Building, then we can get by with just one Conversation state

-

The reason this works is that the HFSM structure adds additional hysteresis that isn’t present in an FSM. With a standard FSM, we can always assume that the state machine starts off in its initial state, but this is not the case with a nested state machine in an HFSM. Note the circled “H” in Figure 4.4, which points to the “history state.” The first time we enter the nested Watch Building state machine, the history state indicates the initial state, but from then on it indicates the most recent active state of that state machine.

-

Our example HFSM starts out in Watch Building (indicated by the solid circle and arrow as before), which chooses Patrol to Safe as the initial state. If our NPC reaches the safe and transitions into Patrol to Door, then the history state switches to Patrol to Door. If the NPC’s phone rings at this point, then our HFSM exits Patrol to Door and Watch Building, transitioning to the Conversation state. After Conversation ends, the HFSM will transition back to Watch Building which resumes in Patrol to Door (the history state), not Patrol to Safe (the initial state).

-

For a solid detailed implementation, check out Section 5.3.9 in the book Artificial Intelligence for Games by Ian Millington and John Funge [Millington and Funge 09].
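A minimal sketch of just the history-state mechanism, assuming a hypothetical `NestedMachine` that stores substate names and remembers the last active one on exit. It replays the Watch Building example: re-entering after the conversation resumes Patrol to Door instead of restarting at Patrol to Safe.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical nested state machine with a history state: the first entry starts
// in the initial substate, later entries resume the substate that was last active.
class NestedMachine {
public:
    NestedMachine(std::vector<std::string> substates, std::size_t initial)
        : substates_(std::move(substates)), history_(initial) {}

    void enter() { active_ = true; current_ = history_; }
    void exit() { history_ = current_; active_ = false; }  // remember where we were
    void switchTo(std::size_t substate) { current_ = substate; }

    bool isActive() const { return active_; }
    const std::string& currentName() const { return substates_[current_]; }

private:
    std::vector<std::string> substates_;
    std::size_t history_;
    std::size_t current_ = 0;
    bool active_ = false;
};
```

A full HFSM would also nest transitions and let substates be machines themselves; this only shows why a single Conversation state is enough.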

page 052:

-

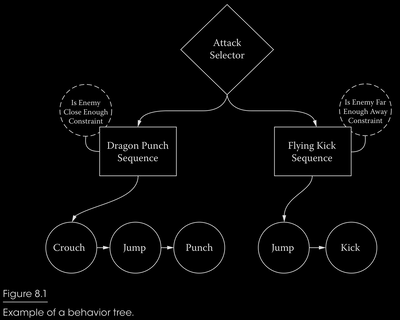

The algorithm to execute a behavior tree is as follows:

-

Make root node the current node

-

While current node exists,

-

Run current node’s precondition

-

If precondition returns true,

- Add node to execute list

- Make node’s child current node

-

Else,

- Make node’s sibling current node

-

Run all behaviors on the execute list
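The loop above as a minimal sketch. The first-child/next-sibling `BTNode` layout is an assumption about how the tree is stored; the selection pass only collects nodes, and their behaviors run afterwards, as in the last step.

```cpp
#include <functional>
#include <vector>

struct BTNode {
    std::function<bool()> precondition;
    std::function<void()> behavior;  // may be empty for pure grouping nodes
    BTNode* firstChild = nullptr;
    BTNode* nextSibling = nullptr;
};

// A node whose precondition passes is queued and its first child is examined
// next; a failing node hands over to its next sibling.
std::vector<BTNode*> selectBehaviors(BTNode* root) {
    std::vector<BTNode*> executeList;
    BTNode* current = root;
    while (current != nullptr) {
        if (current->precondition()) {
            executeList.push_back(current);
            current = current->firstChild;
        } else {
            current = current->nextSibling;
        }
    }
    return executeList;
}

void runBehaviorTree(BTNode* root) {
    for (BTNode* node : selectBehaviors(root)) {
        if (node->behavior) node->behavior();
    }
}
```

Nothing survives between calls, which is the statelessness discussed next: the same tree re-evaluated a frame later can pick a completely different branch.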

page 053:

- Since trees are stateless, the algorithm doesn’t need to remember what behaviors were previously running in order to determine what behaviors should execute on a given frame. Further, behaviors can (and should) be written to be completely unaware of each other, so adding or removing behaviors from a character’s behavior tree does not affect the running of the rest of the tree. This alleviates the problem common with FSMs, where every state must know the transition criteria for every other state.

- Extensibility is also an advantage with behavior trees. It is easy to start from the base algorithm as described and start adding extra functionality. Common additions are behavior on_start/on_finish functions that are run the first time a behavior begins and when it completes. Different behavior selectors can be implemented as well. For example, a parent behavior could specify that instead of choosing one of its children to run, each of its children should be run once in turn, or that one of its children should be chosen randomly to run. Indeed, a child behavior could be run based on a utility system-type selector (see below) if desired. Preconditions can be written to fire in response to events as well, giving the tree flexibility to respond to agent stimuli. Another popular extension is to specify individual behaviors as nonexclusive, meaning that if their precondition is run, the behavior tree should keep checking siblings at that level.

- Since behaviors themselves are stateless, care must be taken when creating behaviors that appear to apply memory. For example, imagine a citizen running away from a battle. Once well away from the area, the “run away” behavior may stop executing, and the highest-priority behavior that takes over could take the citizen back into the combat area, making the citizen continually loop between two behaviors. While steps can be taken to prevent this sort of problem, traditional planners can tend to deal with the situation more easily.

page 054:

- A utility-based system measures, weighs, combines, rates, ranks, and sorts out many considerations in order to decide the preferability of potential actions.
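A minimal sketch of the measure, weigh, combine, and rank loop, assuming every consideration is already normalized to [0, 1] and that an action's considerations are combined as a weighted sum. Both are common choices rather than the only ones (multiplying scores is another frequent combination), and the types are hypothetical.

```cpp
#include <functional>
#include <string>
#include <vector>

struct Consideration {
    std::function<double()> score;  // normalized input, 0..1
    double weight;
};

struct UtilityAction {
    std::string name;
    std::vector<Consideration> considerations;

    // Weighted sum of all considerations for this action.
    double utility() const {
        double total = 0.0;
        for (const Consideration& c : considerations) total += c.weight * c.score();
        return total;
    }
};

// Ranks the actions and returns the highest-scoring one (nullptr if none exist).
const UtilityAction* chooseAction(const std::vector<UtilityAction>& actions) {
    const UtilityAction* best = nullptr;
    double bestScore = 0.0;
    for (const UtilityAction& action : actions) {
        const double score = action.utility();
        if (best == nullptr || score > bestScore) {
            best = &action;
            bestScore = score;
        }
    }
    return best;
}
```

Scoring a Heal action by `1 - health` with weight 1.5 and a Reload action by `1 - ammo` with weight 1.0 makes Reload win while health is high and Heal take over once health drops, without any explicit transition between them.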

page 055:

- Another caveat to using utility-based architecture is that all the subtlety and responsiveness that you gain often comes at a price. While the core architecture is often relatively simple to set up, and new behaviors can be added simply, they can be somewhat challenging to tune. Rarely does a behavior sit in isolation in a utility-based system. Instead, it is added to the pile of all the other potential behaviors with the idea that the associated mathematical models will encourage the appropriate behaviors to “bubble to the top.” The trick is to juggle all the models to encourage the most reasonable behaviors to shine when it is most appropriate. This is often more art than science. As with art, however, the results that are produced are often far more engaging than those generated by using simple science alone.

- For more on utility-based systems, see the article in this book, An Introduction to Utility Theory [Graham 13] and the book Behavioral Mathematics for Game AI [Mark 09].

- Goal-Oriented Action Planning (GOAP) is a technique pioneered by Monolith’s Jeff Orkin for the game F.E.A.R. in 2005, and has been used in a number of games since, most recently for titles such as Just Cause 2 and Deus Ex: Human Revolution. GOAP is derived from the Stanford Research Institute Problem Solver (STRIPS) approach to AI which was first developed in the early 1970s. In general terms, STRIPS (and GOAP) allows an AI system to create its own approaches to solving problems by being provided with a description of how the game world works—that is, a list of the actions that are possible, the requirements before each action can be used (called “preconditions”), and the effects of the action.

page 056:

- Backwards chaining search works in the following manner:
  - Add the goal to the outstanding facts list
  - For each outstanding fact
    - Remove this outstanding fact
    - Find the actions that have the fact as an effect
    - If the precondition of the action is satisfied,
      - Add the action to the plan,
      - Work backwards to add the now-supported action chain to the plan
    - Otherwise,
      - Add the preconditions of the action as outstanding facts
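A compact sketch of the same backward-chaining idea, written recursively instead of with an explicit outstanding-facts list: to achieve a fact, find an action that has it as an effect, achieve that action's preconditions first, then append the action. Facts are plain strings and all names are hypothetical. Unlike a real GOAP planner, this has no action costs, takes the first matching action without searching alternatives, and ignores effects that would undo earlier facts.

```cpp
#include <set>
#include <string>
#include <vector>

struct PlanAction {
    std::string name;
    std::vector<std::string> preconditions;
    std::vector<std::string> effects;
};

// Appends to `plan`, in execution order, the actions needed to make `fact` true.
// `state` holds facts that are true now or will be once the plan so far has run.
bool achieve(const std::string& fact, const std::vector<PlanAction>& actions,
             std::set<std::string>& state, std::vector<std::string>& plan,
             int depth = 0) {
    if (state.count(fact)) return true;  // already satisfied
    if (depth > 16) return false;        // guard against cyclic action chains
    for (const PlanAction& action : actions) {
        bool producesFact = false;
        for (const std::string& effect : action.effects) {
            if (effect == fact) producesFact = true;
        }
        if (!producesFact) continue;
        for (const std::string& pre : action.preconditions) {
            if (!achieve(pre, actions, state, plan, depth + 1)) return false;
        }
        plan.push_back(action.name);
        state.insert(action.effects.begin(), action.effects.end());
        return true;
    }
    return false;  // no action has this fact as an effect
}
```

Starting from a goal of EnemyDead with only HasGun true, an action set of AttackWithGun (needs HasLoadedGun), LoadGun (needs HasGun and HasAmmo), and PickUpAmmo yields the plan PickUpAmmo, LoadGun, AttackWithGun.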

page 057:

- hierarchical task networks (HTN)

page 058:

- As opposed to backward planners like GOAP, which start with a desired world state and move backwards until they reach the current world state, HTN is a forward planner, meaning that it will start with the current world state and work towards a desired solution.

- The following pseudocode shows how a plan is built.
  - Add the root compound task to our decomposing list
  - For each task in our decomposing list
    - Remove task
    - If task is compound
      - Find method in compound task that is satisfied by the current world state
      - If a method is found, add method’s tasks to the decomposing list
      - If not, restore planner to the state before the last decomposed task
    - If task is primitive
      - Apply task’s effects to the current world state
      - Add task to the final plan list

- HTN planners start with a very high-level root task and continuously decompose it into smaller and smaller tasks.
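A compact sketch of the decomposition loop including the restore step, assuming a world state of named boolean flags, methods tried in priority order, and primitive tasks whose effects are applied to a working copy of the state. Backtracking is done by recursing over copies rather than keeping an explicit restore stack; all types are hypothetical.

```cpp
#include <cstddef>
#include <map>
#include <string>
#include <vector>

using WorldState = std::map<std::string, bool>;

struct Method {
    WorldState preconditions;           // flags that must match the current state
    std::vector<std::string> subtasks;  // replaces the compound task, in order
};

struct Task {
    bool compound = false;
    std::vector<Method> methods;        // compound tasks only, in priority order
    WorldState preconditions;           // primitive tasks only
    WorldState effects;                 // primitive tasks only
};

using Domain = std::map<std::string, Task>;

// Missing flags count as false.
static bool matches(const WorldState& required, const WorldState& state) {
    for (const auto& entry : required) {
        auto it = state.find(entry.first);
        const bool value = it != state.end() && it->second;
        if (value != entry.second) return false;
    }
    return true;
}

// Decomposes tasks[index..] forward from `state`, appending primitive task names
// to `plan`. On failure `plan` is left unchanged, so the caller can try its next
// method: that is the "restore planner" step.
bool decompose(const Domain& domain, std::vector<std::string> tasks, std::size_t index,
               WorldState state, std::vector<std::string>& plan) {
    if (index == tasks.size()) return true;
    const std::string& name = tasks[index];
    const Task& task = domain.at(name);
    if (!task.compound) {
        if (!matches(task.preconditions, state)) return false;
        for (const auto& effect : task.effects) state[effect.first] = effect.second;
        plan.push_back(name);
        if (decompose(domain, tasks, index + 1, state, plan)) return true;
        plan.pop_back();
        return false;
    }
    for (const Method& method : task.methods) {
        if (!matches(method.preconditions, state)) continue;
        std::vector<std::string> expanded(tasks.begin(), tasks.begin() + index);
        expanded.insert(expanded.end(), method.subtasks.begin(), method.subtasks.end());
        expanded.insert(expanded.end(), tasks.begin() + index + 1, tasks.end());
        if (decompose(domain, expanded, index, state, plan)) return true;
    }
    return false;
}
```

Because the state only ever moves forward from the current world state as primitive effects are applied, this is the forward-planning counterpart of the backward-chaining sketch for GOAP.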

· Chapter 5 - Structural Architecture - Common Tricks of the Trade

- By: Kevin Dill

page 064:

- Because complexity increases as you add more configuration, break the decision making up hierarchically. That is, have a high-level reasoner that makes the big, overarching decisions, and then one or more lower-level reasoners that handle implementation of the higher-level reasoners’ decisions.

- The advantage here is that the complexity of AI configuration scales worse than linearly in the number of options in a particular reasoner. To give a sense of the relevance, imagine that the cost of configuring the AI is $O(n^2)$ in the number of options (as it is for FSMs). If we have 25 options, then the cost of configuring the AI is on the order of $25^2 = 625$. On the other hand, if we have five reasoners, each with five options, then the cost of configuring the AI is only $5 \times 5^2 = 125$.

page 065:

- Option stacks allow us to push a new, high priority option on top of the stack, suspending the currently executing option but retaining its internal state. When the high priority option completes execution, it will pop itself back off of the stack, and the previously executing option will resume as if nothing had ever happened. There are a myriad of uses for option stacks, and they can often be several levels deep. For example, a high-level strategic reasoner might have decided to send a unit to attack a distant enemy outpost. Along the way, that unit could be ambushed—in which case, it might push a React to Ambush option on top of its option stack. While responding to the ambush, one of the characters in the unit might notice that a live grenade has just been thrown at its feet. That character might then push an Avoid Grenade option on top of React to Ambush. Once the grenade has gone off (assuming the character lives) it can pop Avoid Grenade off the stack, and React to Ambush will resume. Once the enemy ambush is over, it will be popped as well, and the original Attack option will resume.
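A minimal sketch of an option stack, assuming a hypothetical `Option` base class with suspend/resume hooks. Only the top option updates each tick; pushing suspends the previous top without discarding its internal state, and popping resumes it.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical option interface; real options would override update() and keep
// their own internal state, which survives while they are suspended.
class Option {
public:
    explicit Option(std::string name) : name_(std::move(name)) {}
    virtual ~Option() = default;
    virtual void onSuspend() { suspended_ = true; }
    virtual void onResume() { suspended_ = false; }
    virtual void update() {}
    const std::string& name() const { return name_; }
    bool suspended() const { return suspended_; }

private:
    std::string name_;
    bool suspended_ = false;
};

class OptionStack {
public:
    void push(std::unique_ptr<Option> option) {
        if (!stack_.empty()) stack_.back()->onSuspend();  // keep its state, just pause it
        stack_.push_back(std::move(option));
    }

    void pop() {
        if (stack_.empty()) return;
        stack_.pop_back();
        if (!stack_.empty()) stack_.back()->onResume();   // carry on where it left off
    }

    void update() {
        if (!stack_.empty()) stack_.back()->update();      // only the top option runs
    }

    Option* top() const { return stack_.empty() ? nullptr : stack_.back().get(); }
    std::size_t depth() const { return stack_.size(); }

private:
    std::vector<std::unique_ptr<Option>> stack_;
};
```

The ambush example is three pushes (Attack, React to Ambush, Avoid Grenade) followed by two pops that return control to Attack. The hit-reaction trick that follows is the same pattern with an Is Hit option.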

page 066:

- One handy trick is to use option stacks to handle your hit reaction. If a character is hit by an enemy attack (e.g., a bullet), we typically want them to play a visible reaction. We also want the character to stop whatever it was doing while it reacts. For instance, if an enemy is firing their weapon when we hit them, they should not fire any shots while the hit reaction plays. It just looks wrong if they do. Thus, we push an Is Hit option onto the option stack, which suspends all previously running options while the reaction plays, and then pop it back off when the reaction is done.

- In the academic AI community, blackboard architectures typically refer to a specific approach in which multiple reasoners propose potential solutions (or partial solutions) to a problem, and then share that information on a blackboard [Wikipedia 12, Isla et al. 02]. Within the game community, however, the term is often used simply to refer to a shared memory space which various AI components can use to store knowledge that may be of use to more than one of them, or may be needed multiple times.

- Line-of-sight checks and paths are examples of data that could be placed on a blackboard.
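A minimal sketch of the game-industry sense of a blackboard: a shared cache that several AI components read, so an expensive line-of-sight query runs once per frame rather than once per component. The integer target ids, the frame-stamp invalidation, and the injected `raycast` callback are all assumptions for illustration.

```cpp
#include <functional>
#include <map>
#include <utility>

// Hypothetical per-agent blackboard that caches line-of-sight results and only
// recomputes them when the cached value comes from an older frame.
class Blackboard {
public:
    explicit Blackboard(std::function<bool(int)> raycast) : raycast_(std::move(raycast)) {}

    bool hasLineOfSight(int targetId, long frame) {
        auto it = lineOfSight_.find(targetId);
        if (it != lineOfSight_.end() && it->second.frame == frame) {
            return it->second.visible;  // already computed this frame: reuse it
        }
        const bool visible = raycast_(targetId);
        lineOfSight_[targetId] = Entry{visible, frame};
        return visible;
    }

private:
    struct Entry {
        bool visible;
        long frame;
    };
    std::function<bool(int)> raycast_;
    std::map<int, Entry> lineOfSight_;
};
```

A path cache would follow the same shape, keyed by start and goal and invalidated when the navigation data changes instead of every frame.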

page 067:

- One trick is to put the intelligence in the world, rather than in the character. This technique was popularized by The Sims, though earlier examples exist. In The Sims (and its sequels), objects in the world not only advertise the benefits that they offer (for example, a TV might advertise that it’s entertaining, or a bed might advertise that you can rest there), they also contain information about how to go about performing the associated actions [Forbus et al. 01].

- Another advantage of this approach is that it greatly decreases the cost of expansion packs. In the Zoo Tycoon 2 franchise, for example, every other expansion pack was “content only.” Because much of the intelligence was built into the objects, we could create new objects that would be used by existing animals, and even entirely new animals, without having to make any changes to the source code.
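A minimal sketch of objects that advertise their benefits, assuming hypothetical need names and a simple scoring rule (advertised benefit times how urgent that need currently is). This is not how The Sims actually scores advertisements; it only shows why new objects need no agent code, since the object carries both the advertisement and the steps for using it.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical "smart object": advertises which needs it satisfies and carries
// the steps for using it, so agents need no object-specific code.
struct SmartObject {
    std::string name;
    std::map<std::string, double> advertisedBenefits;  // need -> amount restored
    std::vector<std::string> usageSteps;               // e.g. animations to play
};

// Picks the object whose advertisements best address the agent's needs
// (0 = satisfied, 1 = desperate). Returns nullptr if nothing helps.
const SmartObject* chooseObject(const std::vector<SmartObject>& objects,
                                const std::map<std::string, double>& needs) {
    const SmartObject* best = nullptr;
    double bestScore = 0.0;
    for (const SmartObject& object : objects) {
        double score = 0.0;
        for (const auto& ad : object.advertisedBenefits) {
            auto need = needs.find(ad.first);
            if (need != needs.end()) score += need->second * ad.second;
        }
        if (score > bestScore) {
            best = &object;
            bestScore = score;
        }
    }
    return best;
}
```

Adding a new object, or an expansion-pack object, is then pure data: a name, its advertisements, and its usage steps.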

· Chapter 6 - The Behavior Tree Starter Kit

- By: Alex J. Champandard and Philip Dunstan

· Chapter 7 - Real-World Behavior Trees in Script

- By: Michael Dawe

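```cpp
#include <functional>

struct BehaviorNode
{
    std::function<bool()> precondition;
    std::function<void()> action;
    BehaviorNode* child = nullptr;
    BehaviorNode* sibling = nullptr;
};

// Run the node if its precondition passes and then descend into its child;
// otherwise fall through to the next sibling.
void process_behavior_node(BehaviorNode& node)
{
    if (node.precondition()) {
        node.action();
        if (node.child)
            process_behavior_node(*node.child);
    } else {
        if (node.sibling)
            process_behavior_node(*node.sibling);
    }
}
```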

· Chapter 8 - Simulating Behavior Trees: A Behavior Tree/Planner Hybrid Approach

- By: Daniel Hilburn


page 101:

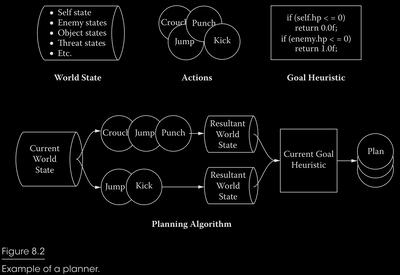

- As you can see, planners are great at managing what an AI should do. They allow designers to specify high-level goals for the AI by evaluating world states in the planner’s heuristic, rather than trying to design specific behaviors for specific situations. Planners are able to do this by keeping a very strict separation between what an AI does (actions) and what the AI should do (heuristics). This also makes the AI more flexible and durable in the face of design changes. If the jump action gets cut because the team didn’t have time to polish the animations, just remove it. The AI will still create the best possible plan for its current world state. If the kick action suddenly also sends out a shockwave, you only need to add that to the kick action’s result description. You don’t need to change what the AI should do just because you changed what it does.

page 102:

-

While the flexibility of planners is a great strength, it can also be a great weakness. Often, designers will want to have more control over the sequences of actions that an AI can perform. While it is cool that your AI can create a jump kick plan on its own, it could also create a sequence of 27 consecutive jumps. This breaks the illusion of intelligence our AI should produce.

-

This is the classic tradeoff that AI designers and programmers have to deal with constantly: the choice between the fully designed (brittle) AI that behavior trees provide and the fully autonomous (unpredictable) AI that planners provide.

page 110:

- Another wrinkle that arose was the idea of mistakes. It isn’t realistic for the Jedi to always defeat their enemies; they should sometimes fail.

Rather than add these special cases to each Action, we added a special Action called a FakeSim. The FakeSim Action is a special type of Composite Action called a decorator, which wraps another Action to add extra functionality to it. The FakeSim was responsible for adding incorrect information to the wrapped Action’s simulation step by modifying the world state directly. For example, there are some enemies that have a shield which makes them invulnerable to lightsaber attacks. If we want a Jedi to attack the enemy to demonstrate that the enemy is invulnerable while the shield is up, we can wrap the SwingSaber Action with a FakeSim Decorator which lowers the victim’s shield during the simulation step. Then, the SwingSaber simulation will think that the Jedi can damage the enemy and give it a good simulation result. This would allow SwingSaber to be chosen, even though it won’t actually be beneficial.
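A minimal sketch of the FakeSim idea, assuming each Action exposes a `simulate(world)` step that mutates a copy of the world state. `World`, `simulated_damage`, `lower_shield`, and the damage numbers are hypothetical; only the names FakeSim and SwingSaber come from the chapter. The decorator tampers with the simulated copy only, so the real shield stays up and the real swing still does nothing.

```python
import copy
from dataclasses import dataclass
from typing import Callable


@dataclass
class World:
    """Hypothetical world state holding just what this example needs."""
    enemy_hp: int = 10
    enemy_shield_up: bool = True


class SwingSaber:
    """Simulation step: the saber only deals damage if the shield is down."""
    name = "SwingSaber"

    def simulate(self, world: World) -> None:
        if not world.enemy_shield_up:
            world.enemy_hp -= 5


class FakeSim:
    """Decorator that injects incorrect information before delegating."""

    def __init__(self, wrapped, tamper: Callable[[World], None]) -> None:
        self.wrapped = wrapped
        self.tamper = tamper
        self.name = f"FakeSim({wrapped.name})"

    def simulate(self, world: World) -> None:
        self.tamper(world)            # modify the simulated world state directly
        self.wrapped.simulate(world)  # then run the wrapped Action's simulation


def lower_shield(world: World) -> None:
    world.enemy_shield_up = False


def simulated_damage(action, world: World) -> int:
    """Score an action by simulating it on a copy of the world."""
    sim = copy.deepcopy(world)
    action.simulate(sim)
    return world.enemy_hp - sim.enemy_hp


real_world = World()
honest_score = simulated_damage(SwingSaber(), real_world)
faked_score = simulated_damage(FakeSim(SwingSaber(), lower_shield), real_world)
```

With the honest simulation the swing scores zero and would never be picked; wrapped in FakeSim it scores well, which is exactly what lets the Jedi make the "mistake."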

· Chapter 9 - An Introduction to Utility Theory

- By: David “Rez” Graham

page 114:

-

It’s important to note that utility is not the same as value. Value is a measurable quantity (such as the prices above). Utility measures how much we desire something. This can change based on personality or the context of the situation.

-

Using normalized scores (values that go from 0–1) provides a reasonable starting point.

-

It’s important to note that any value range will work, as long as there is consistency across the different variables. If an AI agent scores an action with a value of 15, you should know immediately what that means in the context of the whole system. For instance, does that 15 mean 15 out of 25 or 15%?

-

The key to decision making using utility-based AI is to calculate a utility score (sometimes called a weight) for every action the AI agent can take and then choose the action with the highest score.

page 115:

- The most common technique is to multiply the utility score of each possible outcome by the probability of that outcome and sum up these weighted scores. This will give you the expected utility of the action. This can be expressed mathematically with Equation 9.1.

$$EU = \sum_{i=1}^n D_i P_i$$

-

$D$ is the desire for that outcome (i.e., the utility), and $P$ is the probability that the outcome will occur. This probability is normalized so that the sum of all the probabilities is 1. This is applied to every possible action that can be chosen, and the action with the highest expected utility is chosen. This is called the principle of maximum expected utility [Russell et al. 09].

-

For example, an enemy AI in an RPG attacking the player has two possible outcomes— either the AI hits the player or it misses. If the AI has an 85% chance to hit the player, and successfully hitting the player has a calculated utility score of 0.6, the adjusted utility would be 0.85 × 0.6 = 0.51. (Note that, in this case, missing the player has a utility of zero, so there’s no need to factor it in.) Taking this further, if this attack were to be compared to attacking with a different weapon, for example, with a 60% chance of hitting but a utility score of 0.9 if successful, the adjusted utility would be 0.60 × 0.9 = 0.54. Despite having a lesser chance of hitting, the second option provides a greater overall expected utility.
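The weapon comparison above, worked through Equation 9.1 as a small sketch. Each action is a list of (desire, probability) outcome pairs; the names `first_weapon` and `second_weapon` are hypothetical labels for the two attacks in the example.

```python
from typing import Dict, List, Tuple

Outcome = Tuple[float, float]  # (desire D_i, probability P_i)


def expected_utility(outcomes: List[Outcome]) -> float:
    """Equation 9.1: EU = sum over outcomes of D_i * P_i."""
    if abs(sum(p for _, p in outcomes) - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(d * p for d, p in outcomes)


# The two attacks from the example; a miss has zero utility.
attacks: Dict[str, List[Outcome]] = {
    "first_weapon": [(0.6, 0.85), (0.0, 0.15)],
    "second_weapon": [(0.9, 0.60), (0.0, 0.40)],
}
scores = {name: expected_utility(o) for name, o in attacks.items()}
best = max(scores, key=scores.get)  # principle of maximum expected utility
```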

-

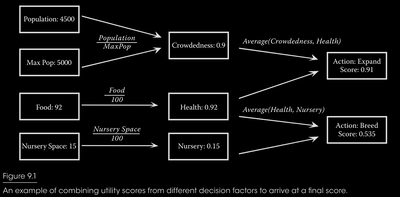

Let’s say we have an ant simulation game where the AI must determine whether to expand the colony or whether to breed. There are three different factors we want to consider for these decisions. The first is the overall crowdedness of the colony. If there are too many ants, we need to expand to make room for more. The second is the health of the colony, which we’ll say is based on how full the food stores are. Ant eggs need to be kept at a specific temperature, so there are specially built nurseries that house the eggs where they are taken care of. The amount of room in these nurseries is the third decision factor. These decision factors are based on game statistics that determine the score for each factor. The population and max population determine how many ants are in the colony and how many can exist based on the current colony size. The food stat represents how full the food stores are and is measured as a number from 0 to 100. The nursery space stat is also measured from 0 to 100 and represents how much space there is in the nursery. You can think of the last two stats as percentages.

page 116:

- By averaging the normalized scores together, we can build an endless chain of combinations. This is a really powerful concept. Each decision factor is effectively isolated from every other decision factor. The only thing we know or care about is that the output will be a normalized score. We can easily add more game stat inputs, like the distance to an enemy ant colony. This could feed into a decision factor for deciding what kind of ants to breed. You can easily move decision factors around as well, combining them in different ways. If you wanted crowdedness to factor negatively into the decision for breeding, you could subtract crowdedness from 1.0 and average that into the score for breeding.
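A sketch of the ant-colony factors as normalized scores combined by averaging. The wiring is an assumption, since the figure that defines it is an image: expanding is driven by crowdedness alone, and breeding averages colony health and nursery room. The stat values are hypothetical. The last line shows the variant from the text, where crowdedness counts against breeding via `1.0 - crowdedness`.

```python
def crowdedness(population: int, max_population: int) -> float:
    return min(population / max_population, 1.0)


def colony_health(food: float) -> float:
    return food / 100.0            # food stat is measured 0-100


def nursery_room(nursery_space: float) -> float:
    return nursery_space / 100.0   # nursery space stat is measured 0-100


def average(*scores: float) -> float:
    return sum(scores) / len(scores)


# Hypothetical snapshot of the colony's game stats.
c = crowdedness(population=80, max_population=100)
h = colony_health(food=60)
n = nursery_room(nursery_space=30)

expand_score = c
breed_score = average(h, n)
breed_score_crowding_aware = average(h, n, 1.0 - c)
```

Because every factor already lives in 0 to 1, adding a new input (say, distance to a rival colony) is just another argument to `average`.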

page 117:

-

In the ant example above, we chose to represent health as a linear ratio by dividing the current amount of food by the maximum amount of food. This probably isn’t a very realistic calculation since the colony shouldn’t care about food when the stores are mostly full. Some kind of quadratic curve is closer to what we want.

-

The key to utility theory is to understand the relationship between the input and the output, and being able to describe that resulting curve [Mark 09]. This can be thought of as a conversion process, where you are converting one or more values from the game to utility. Coming up with the proper function is really more art than science and is usually where you’ll spend most of your time. There are a huge number of different formulas you could use to generate reasonable utility curves, but a few of them crop up often enough that they warrant some discussion.

-

A linear curve forms a straight line with a constant slope. The utility value is simply a multiplier of the input. Equation 9.2 shows the formula for calculating a normalized utility score for a given value and Figure 9.2 shows the resulting curve.

$$U =\frac{x}{m}$$

-

In Equation 9.2, $x$ is the input value and $m$ is the maximum value for that input. This is really just a normalization function, which is all we need for a linear output.

-

A quadratic function is one that forms a parabolic curve, causing it to start slow and then curve upwards very quickly. The simplest way to achieve this is to add an exponent to Equation 9.2. Equation 9.3 shows an example of this.

$$U = \left(\frac{x}{m}\right)^k$$

page 118:

- As the value of $k$ rises, the steepness of the curve will also rise. Since the equation normalizes the output, it will always converge on 0 and 1, so a large value of $k$ will have very little impact for low values of $x$. Figure 9.3 shows curves for three different values of $k$. It’s also possible to rotate the curve so that the effect is more urgent for low values of $x$ rather than high values. If you use an exponent between 0 and 1, the curve is effectively rotated, as shown in Figure 9.4.
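Equations 9.2 and 9.3 as functions, as a small sketch. Clamping the input to $[0, m]$ is an addition of this sketch so the output always stays normalized; the chapter's equations do not clamp.

```python
def linear_utility(x: float, m: float) -> float:
    """Equation 9.2: U = x / m, with x clamped to [0, m] (clamp is an assumption)."""
    return min(max(x, 0.0), m) / m


def power_utility(x: float, m: float, k: float) -> float:
    """Equation 9.3: U = (x / m) ** k.
    k > 1: stays low, then rises sharply near the top of the range.
    0 < k < 1: rises early (the 'rotated' curve)."""
    return linear_utility(x, m) ** k
```

For example, at half the input range, `k = 2` gives 0.25, `k = 0.5` gives about 0.71, and a steep `k = 4` leaves an input of 20 out of 100 at only 0.0016.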

page 119:

-

The logistic function is another common formula for creating utility curves. It’s one of several sigmoid functions that place the largest rate of change in the center of the input range, trailing off at both ends as they approach 0 and 1. The input range for the logistic function can be just about anything, but it is effectively limited to $[-10, 10]$. There really isn’t much point generating a curve larger than that, and the range is often clamped down even further. For example, when $x$ is 6, $U$ will be 0.9975.

-

Equation 9.4 shows the formula for the logistic function and Figure 9.5 shows the resulting curve. Note the use of the constant $e$. This is Euler’s number—the base of the natural logarithm—which is approximately 2.718281828. This value can be adjusted to affect the shape of the curve. As the number goes up, the curve will sharpen and begin to resemble a square wave. As the number goes down, it will soften.

$$U = \frac{1}{1+e^{-x}}$$

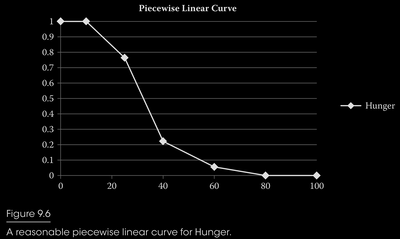
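Equation 9.4 as a sketch, with the base exposed as a parameter because the text says it can be tuned: a larger base sharpens the curve toward a step, a smaller one softens it. `logistic_from_normalized` is a hypothetical helper that maps a 0 to 1 input onto $[-6, 6]$, the same mapping Equation 9.7 uses later.

```python
import math


def logistic(x: float, base: float = math.e) -> float:
    """Equation 9.4: U = 1 / (1 + base ** -x)."""
    return 1.0 / (1.0 + base ** (-x))


def logistic_from_normalized(t: float, base: float = math.e) -> float:
    """Map t in [0, 1] onto [-6, 6] before applying the logistic (hypothetical helper)."""
    return logistic(t * 12.0 - 6.0, base)
```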

page 120:

-

A piecewise linear curve is just a custom-built curve. The idea is that you hand-tune a bunch of 2D points that represent the thresholds you want.

-

You might want hunger to NEVER be selectable when it is below a certain threshold.

-

There are many other types of custom curves. For example, the curve in Figure 9.6 could be changed so that the values from 15 to 60 are calculated with a quadratic curve, while the rest are linear. There’s no limit to the number of combinations you can have.
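A sketch of a piecewise linear response curve: hand-tuned (input, utility) points, linear interpolation between neighbors, and clamping outside the first and last points. The hunger points are hypothetical; they hold utility at zero below 30 so that, with a zero-weight-never-picked selection rule, eating is never chosen there.

```python
from bisect import bisect_right
from typing import List, Tuple

Point = Tuple[float, float]  # (input, utility), sorted by input


def piecewise_linear(x: float, points: List[Point]) -> float:
    """Interpolate between hand-tuned points; clamp outside the defined range."""
    xs = [px for px, _ in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # guarantees xs[i - 1] <= x < xs[i]
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (x - x0) / (x1 - x0) * (y1 - y0)


# Hypothetical hunger curve: nothing below 30, then rising to 1.0 at 100.
HUNGER_CURVE: List[Point] = [(0, 0.0), (30, 0.0), (60, 0.5), (100, 1.0)]
```

Swapping one segment for a quadratic, as the text suggests, would just mean evaluating a different function for inputs between that segment's two points.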

-

Once the utility has been calculated for each action, the next step is to choose one of those actions. There are a number of ways you can do this. The simplest is to just choose the highest scoring option. For some games, this may be exactly what you want. A chess AI should definitely choose the highest scoring move. A strategy game might do the same. For some games (like The Sims), always choosing the absolute best action can feel very robotic, because the same action will be selected every time that situation comes up. Another solution is to use the utility scores as weights and randomly choose one of the actions based on those weights. This can be accomplished by dividing each score by the sum of all scores to get the percentage chance that the action will be chosen. Then you generate a random number and select the action that number corresponds to. This tends to have the opposite problem, however. Your AI agents will behave reasonably well most of the time, but every now and then, they’ll choose something utterly stupid.

page 121:

-

You can get the best of both worlds by taking a subset of the highest scoring actions and choosing one of those with a weighted random. This can either be a tuned value, such as choosing from among the top five scoring actions, or it can be percentile based where you take the highest score and also consider things that scored within, say, 10% of it.
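The three selection strategies side by side, as a sketch. `random.Random.choices` performs the "divide by the sum, roll, look up" step internally. The action names and scores are hypothetical, and the weights must not all be zero (`choices` raises `ValueError` in that case).

```python
import random
from typing import Dict, Optional


def pick_highest(scores: Dict[str, float]) -> str:
    """Always take the best action (right for chess, can feel robotic in a life sim)."""
    return max(scores, key=scores.get)


def pick_weighted(scores: Dict[str, float], rng: random.Random) -> str:
    """Weighted random over every action: score / sum(scores) is its chance."""
    actions = list(scores)
    return rng.choices(actions, weights=[scores[a] for a in actions], k=1)[0]


def pick_weighted_from_best(scores: Dict[str, float], rng: random.Random,
                            top_n: Optional[int] = None, within: float = 0.10) -> str:
    """Weighted random among the top_n actions, or, if top_n is None, among
    the actions scoring within `within` (a fraction) of the best score."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    if top_n is not None:
        candidates = ranked[:top_n]
    else:
        cutoff = scores[ranked[0]] * (1.0 - within)
        candidates = [a for a in ranked if scores[a] >= cutoff]
    return rng.choices(candidates, weights=[scores[a] for a in candidates], k=1)[0]


# Hypothetical scores: only "eat" and "watch_tv" are within 10% of the best.
scores = {"eat": 0.80, "watch_tv": 0.75, "sleep": 0.30, "juggle": 0.05}
choice = pick_weighted_from_best(scores, random.Random(42))
```

The percentile variant adapts to the score spread: when one action clearly dominates, it behaves like `pick_highest`, and when several are close, the agent varies its behavior among them.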

-

there could also be times when some set of actions are just completely inappropriate. You may not even want to score them. For example, say you’re making an FPS and have a guard AI. You might have some set of actions for him to consider, like getting some coffee, chatting with his fellow guard, checking for lint, etc. If the player shoots at him, he shouldn’t even consider any of those actions.

-

The most straightforward way to solve this is with bucketing, also known as dual utility AI [Dill 11]. All actions are categorized into buckets and each bucket is given a weight. The higher priority buckets are always processed first.

-

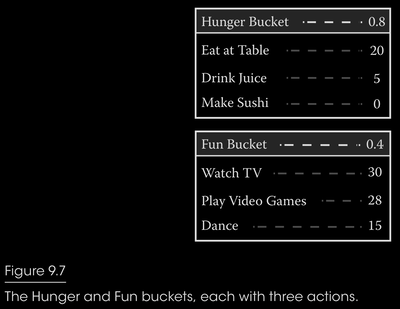

In Figure 9.7, you can see that there are two buckets, one for Hunger and one for Fun. Hunger has scored 0.8 while Fun has scored 0.4. The Sim will walk through all possible actions in the Hunger bucket and, assuming any of those actions are valid, will choose one. The Sim will not consider anything in the Fun bucket.

page 122:

-

The buckets themselves are scored based on a response curve created by designers.
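A sketch of bucketing (dual utility): buckets are visited in descending weight, and the first bucket containing any valid action decides the outcome, regardless of how well actions in lower buckets score. The 0.8 and 0.4 weights echo Figure 9.7; the action names and utilities are hypothetical. Inside the winning bucket this sketch takes the highest-utility action; a weighted random among the bucket's valid actions would work just as well.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Action:
    name: str
    utility: float
    valid: bool = True


@dataclass
class Bucket:
    name: str
    weight: float  # in practice scored by a designer-authored response curve
    actions: List[Action] = field(default_factory=list)


def choose_dual_utility(buckets: List[Bucket]) -> Optional[Action]:
    """The highest-weight bucket with any valid action wins outright; only then
    is utility compared among the actions inside that bucket."""
    for bucket in sorted(buckets, key=lambda b: b.weight, reverse=True):
        valid = [a for a in bucket.actions if a.valid]
        if valid:
            return max(valid, key=lambda a: a.utility)
    return None


hunger = Bucket("Hunger", 0.8, [Action("EatSnack", 0.5), Action("CookMeal", 0.7)])
fun = Bucket("Fun", 0.4, [Action("WatchTV", 0.95)])
first_choice = choose_dual_utility([fun, hunger])  # WatchTV's 0.95 is never compared

hunger_blocked = Bucket("Hunger", 0.8, [Action("EatSnack", 0.5, valid=False)])
fallback_choice = choose_dual_utility([fun, hunger_blocked])
```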

-

One issue that’s worth bringing up in any AI system is the concept of inertia. If your AI agent is attempting to decide something every frame, it’s possible to run into oscillation issues, especially if you have two things that are scored similarly. For example, say you have an FPS where the AI realizes it’s in a bad spot. The enemy soldier starts scoring both “attack the player” and “run away” at 0.5. If the AI was making a new decision every frame, it is possible that they would start appearing very frantic. The AI might shoot the player a couple of times, start to run away, then shoot again, then repeat. Oscillations in behavior such as this look very bad.

-

One solution is to add a weight to any action that you are already currently engaged in. This will cause the AI to tend to remain committed until something truly better comes along. Another solution is to use cooldowns. Once an AI agent makes a decision, they enter a cooldown stage where the weighting for remaining in that action is extremely high. This weight can revert at the end of the cooldown period, or it can gradually drop as well. Another solution is to stall making another decision—either for a period of time or until such time as the current action is finished. This really depends on the type of game you’re making and how your decision/action process works, however. On The Sims Medieval, a Sim would only attempt to make a decision when their interaction queue was empty. Once they chose an action, they would commit to performing that action. Once the Sim completed (or failed to complete) their action, they would choose a new action.
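A sketch combining two of the remedies above: a hard cooldown after each switch, followed by a standing bonus for the action already in progress. `CommittedSelector`, the 0.1 bonus, and the 1.5-second cooldown are hypothetical tuning choices, not values from the chapter.

```python
from typing import Dict, Optional


class CommittedSelector:
    """Highest-score selection with a post-switch cooldown and a
    commitment bonus for the current action."""

    def __init__(self, commitment_bonus: float = 0.1, cooldown_s: float = 1.5) -> None:
        self.commitment_bonus = commitment_bonus
        self.cooldown_s = cooldown_s
        self.current: Optional[str] = None
        self.time_in_current = 0.0

    def update(self, scores: Dict[str, float], dt: float) -> str:
        self.time_in_current += dt
        if self.current in scores and self.time_in_current < self.cooldown_s:
            return self.current  # still cooling down: stay committed

        def biased(action: str) -> float:
            bonus = self.commitment_bonus if action == self.current else 0.0
            return scores[action] + bonus

        best = max(scores, key=biased)
        if best != self.current:
            self.current = best
            self.time_in_current = 0.0
        return best


# Usage: near-tied scores that flip every frame no longer cause flip-flopping.
selector = CommittedSelector()
frames = [{"attack": 0.50, "flee": 0.51} if i % 2 == 0 else {"attack": 0.51, "flee": 0.50}
          for i in range(10)]
choices = [selector.update(scores, dt=0.2) for scores in frames]
# Something truly better still wins once the cooldown has elapsed.
later = selector.update({"attack": 0.90, "flee": 0.30}, dt=0.2)
```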

page 123:

- When making decisions, the AI considers four basic factors. The first is a desire to attack, which is based on a tuned value that scales linearly as it becomes possible to kill the player in a single hit. This causes the actor to get more aggressive during the end-game and take more risks, as shown in Equation 9.5. This is a good example of a range-bound linear curve. The value of $a$ in the equation is the tuned aggression of the actor, which is the default score.

$$U = \max\Biggl( \min\biggl( \Bigl( 1-\frac{hp-minDmg}{maxDmg-minDmg} \Bigr) \times(1-a)+a,\ 1 \biggr),\ 0 \Biggr)$$

-

Figure 9.8 shows the resulting curve from Equation 9.5 where $a$ is set to 0.6.

-

The second decision factor is the threat. This is a curve that measures what percentage of the actor’s current hit points will be taken away if the player hits for maximum damage. It has a shape similar to a quadratic curve and is generated with Equation 9.6.

$$U = \min\left( \frac{maxDmg}{hp},\ 1 \right)$$

-

Figure 9.9 shows the resulting curve for Threat.

-

The third decision factor is the actor’s desire for health. This uses a variation of the logistic function in Equation 9.4. As the actor’s hit points are reduced, its desire to heal will rise. Equation 9.7 shows the formula for this decision factor.

$$U = 1 - \frac{1}{1+(e\times 0.68)^{-\left(\frac{hp}{maxHp}\times 12\right)+6}}$$

- The resulting curve is a nice, smooth, sigmoid curve, which is shown in Figure 9.10. Note the addition of +6 to the exponent. This is what pushes the curve over to the positive x-axis rather than centering around 0.

page 124:

- The final decision factor is the desire to run away. This is a quadratic curve with a steepness based on the number of potions the agent has. If the agent has several potions, the likelihood of running away is extremely small. If the agent has none, this desire grows much faster. Equation 9.8 shows the formula for the run desire.

$$U = 1-\left(\frac{hp}{maxHp}\right)^{\frac{1}{(p+1)^4}\times 0.25}$$

- The curve itself is dependent on the value of $p$, which is the number of potions the actor has left. Figure 9.11 shows various curves for various values of $p$.
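Equations 9.5 through 9.8 as plain functions, as a sketch. The argument names reflect one reading of the text and are assumptions: in Equation 9.5, `hp` is the player's hit points and the damage range is the actor's; in Equation 9.6, `maxDmg` is the player's maximum damage and `hp` is the actor's.

```python
import math


def attack_desire(player_hp: float, min_dmg: float, max_dmg: float, a: float) -> float:
    """Equation 9.5: range-bound linear curve; `a` is the tuned aggression."""
    t = 1.0 - (player_hp - min_dmg) / (max_dmg - min_dmg)
    return max(min(t * (1.0 - a) + a, 1.0), 0.0)


def threat(player_max_dmg: float, hp: float) -> float:
    """Equation 9.6: share of the actor's hp one max-damage hit would remove."""
    return min(player_max_dmg / hp, 1.0)


def heal_desire(hp: float, max_hp: float) -> float:
    """Equation 9.7: inverted logistic with base e * 0.68, centered at half health."""
    base = math.e * 0.68
    return 1.0 - 1.0 / (1.0 + base ** (-(hp / max_hp) * 12.0 + 6.0))


def run_desire(hp: float, max_hp: float, potions: int) -> float:
    """Equation 9.8: more potions give a tiny exponent and almost no urge to run."""
    exponent = (1.0 / (potions + 1) ** 4) * 0.25
    return 1.0 - (hp / max_hp) ** exponent
```

With `a = 0.6` as in Figure 9.8, `attack_desire` sits at 0.6 when the player is just out of one-hit range and saturates at 1.0 once a minimum-damage hit would kill.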

page 126:

-

References

-

[Dill 11] K. Dill. “A game AI approach to autonomous control of virtual characters.” Interservice/Industry Training, Simulation, and Education Conference, 2011, pp. 4–5. Available online (http://www.iitsec.org/about/PublicationsProceedings/Documents/11136_Paper.pdf).

-

[Mark 09] D. Mark. Behavioral Mathematics for Game AI. Reading, MA: Charles River Media, 2009, pp. 229–240.

-

[Russell et al. 09] S. Russell and P. Norvig. Artificial Intelligence: A Modern Approach. Reading, MA: Prentice Hall, 2009, pp. 480–509.

· Chapter 10 - Building Utility Decisions into Your Existing Behavior Tree

- By: Bill Merrill

page 129:

-

In a standard behavior tree, priority is static. It is baked right into the tree. The simplicity is welcome, but in practice it can be frustratingly limiting. The same behavior may require different relative priorities, depending on the context. Ensuring our Monster Hunter’s primary weapon has a full clip should always be a consideration, even if we’re casually patrolling the jungle. But if we’re engaged with a savage monster, it’s absolutely necessary that we continue to deal damage. Behavior tree authors often deal with this conundrum by duplicating sections of the tree at different branches, with different conditions and/or priorities. Even with slick sub-tree instancing or referencing, this still becomes inefficient, verbose, and potentially fragile.

-

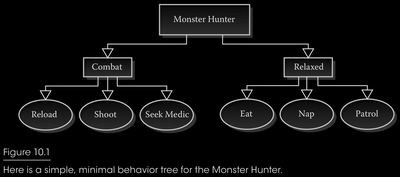

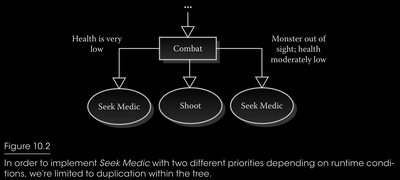

Decisions are rarely binary, and many behaviors simply do not have priorities we can comfortably establish offline. Let’s start with a simple example behavior tree (Figure 10.1). Having no ability to shoot is a precondition for the Seek Medic behavior, forcing us to duplicate the behavior, as seen in Figure 10.2. We could start by giving Seek Medic stricter conditions and prioritizing it over Shoot, but this will likely create the opposite problem where the Monster Hunter immediately takes the Seek Medic action the instant conditions pass. This is the sort of fundamental problem we want to address with the integration of utility.

page 131:

- if our Monster Hunter is low on health and wishes to consider rendezvousing with the squad’s medic for a health boost, we can measure the benefits of receiving treatment in health points gained. However, running frantically to a safe position is likely to gain the attention of the alien beast, putting us at risk. If we can measure the risk by predicting the health we’re likely to lose in transit, both inputs are now in terms of health points and can be combined and/or compared directly, as in Equation (10.1). We could simply take their difference, and if the net value is positive, taking this action has some benefit we can weigh against other actions.

$$RawUtility = HealthGained - HealthLost$$

- More desirably, by attaching more weight to the amount of health we’ll lose in transit, we can ensure that we only take this action if we expect to net a significant amount of health, as seen in Equation (10.2). After all, breaking even would be a waste of the time we could’ve otherwise spent slaying the creature. We also want a high degree of confidence that, even if our predictions were overly optimistic, we’re unlikely to end up with a net loss in health and looking rather boneheaded as a result. Naturally there’s more we could do, such as raising HealthLost to a power greater than 1, which causes the utility to fall off more rapidly as the risk grows, as in Equation (10.3).

$$RawUtility = HealthGained - (HealthLost \times 2.0)$$

$$RawUtility = HealthGained - HealthLost^{1.2}$$

- What happens if we’re unable to represent our input values in such easily relatable units, and we wish to consider much more than just a net change in health? One way to combat this scenario is to combine the various influences into higher-level, more abstract values such as “Morale,” “Threat,” etc. The utility of running to visit our medic could also take into consideration the lost time we could’ve otherwise spent damaging the monster. Specifically, we could take our formula above, normalize the result, and classify it as a “Heal” factor. Next, we could generate a second formula representing this time lost, normalize it, and classify it as “Delay.” We now have two normalized quantities representing higher-level valuations, which we can combine into a final utility value.

$$Utility = \frac{Heal\times HealPower - Delay \times DelayPower}{HealPower + DelayPower}$$
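Equations (10.1) to (10.3) and the Heal/Delay combination, written as plain functions so the penalty shapes can be compared on the same inputs. This is a sketch: the health numbers and power weights in the usage lines are hypothetical, and the normalization step that would turn a net health value into the Heal factor is left out because the chapter does not pin it down.

```python
def raw_utility(health_gained: float, health_lost: float) -> float:
    """Equation (10.1): net health change."""
    return health_gained - health_lost


def cautious_utility(health_gained: float, health_lost: float,
                     loss_weight: float = 2.0) -> float:
    """Equation (10.2): weight the expected loss more heavily."""
    return health_gained - health_lost * loss_weight


def steep_utility(health_gained: float, health_lost: float,
                  loss_exponent: float = 1.2) -> float:
    """Equation (10.3): penalize large expected losses disproportionately."""
    return health_gained - health_lost ** loss_exponent


def combined_utility(heal: float, delay: float,
                     heal_power: float, delay_power: float) -> float:
    """Weighted combination of normalized Heal and Delay factors."""
    return (heal * heal_power - delay * delay_power) / (heal_power + delay_power)


gained, lost = 40.0, 10.0  # hypothetical predicted health gain and loss
penalties = (raw_utility(gained, lost),
             cautious_utility(gained, lost),
             steep_utility(gained, lost))
heal_vs_delay = combined_utility(heal=0.8, delay=0.3, heal_power=2.0, delay_power=1.0)
```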

page 132:

-

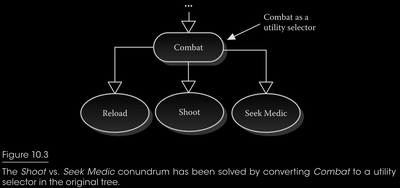

The tree already features a component for selecting which branches are taken during execution, namely the selector. To introduce utility-based selection, we’ll simply create a new specialized type of selector that considers not just the binary validity of its children, but their relative utility as well. We’ll cleverly dub the new node type the utility selector.

-

we can address our problem with Seek Medic by switching Combat to a utility selector, as we’ve done in Figure 10.3.

page 134:

- a utility selector must expand all nodes in its child sub-trees, potentially conducting large quantities of utility calculations in a single pass. This may or may not be a problem depending on the scale you’re working with, but with complex utility calculations in large behavior trees on platforms sensitive to random memory access patterns, it’s certainly not ideal. Thankfully, there are ways to mitigate this problem. For one, we could limit utility calculations to some interval within our leaf behaviors’ implementations, and return cached values. Alternatively, we could compute utility values for all of our tree’s leaf nodes within a completely separate pass, with its own load balancing, leaving only cached values to be used during calls to CalculateUtility().
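A sketch of a utility selector plus the interval-caching mitigation. `UtilityLeaf`, `refresh_every`, and having a composite report the best utility among its valid children are assumptions for illustration; `calculate_utility` mirrors the `CalculateUtility()` call named in the text. The Shoot and Seek Medic utilities are hypothetical.

```python
from typing import Callable, List, Optional


class UtilityLeaf:
    """Leaf behavior whose utility is recomputed only every `refresh_every` queries."""

    def __init__(self, name: str, is_valid: Callable[[], bool],
                 compute_utility: Callable[[], float], refresh_every: int = 1) -> None:
        self.name = name
        self.is_valid = is_valid
        self._compute = compute_utility
        self.refresh_every = refresh_every
        self._cached: Optional[float] = None
        self._queries_since_refresh = 0

    def calculate_utility(self) -> float:
        if self._cached is None or self._queries_since_refresh >= self.refresh_every:
            self._cached = self._compute()
            self._queries_since_refresh = 0
        self._queries_since_refresh += 1
        return self._cached

    def tick(self) -> str:
        return self.name  # stand-in for actually running the behavior


class UtilitySelector:
    """Selector that runs the valid child with the highest utility."""

    def __init__(self, children: List) -> None:
        self.children = children

    def is_valid(self) -> bool:
        return any(child.is_valid() for child in self.children)

    def calculate_utility(self) -> float:
        # Assumption: a composite reports the best utility among its valid children.
        valid = [c for c in self.children if c.is_valid()]
        return max((c.calculate_utility() for c in valid), default=0.0)

    def tick(self) -> Optional[str]:
        valid = [c for c in self.children if c.is_valid()]
        if not valid:
            return None
        return max(valid, key=lambda c: c.calculate_utility()).tick()


medic_evaluations: List[int] = []


def medic_utility() -> float:
    medic_evaluations.append(1)  # count the expensive evaluations
    return 0.7


shoot = UtilityLeaf("Shoot", lambda: True, lambda: 0.6)
medic = UtilityLeaf("SeekMedic", lambda: True, medic_utility, refresh_every=3)
combat = UtilitySelector([shoot, medic])
results = [combat.tick() for _ in range(6)]
```

Over six ticks the expensive Seek Medic evaluation runs only twice; the other four queries reuse the cached value.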

· Chapter 11 - Reactivity and Deliberation in Decision-Making Systems

- By: Carle Côté

page 137:

- Reactivity is about the ability of an agent to be responsive when stimuli are perceived in its environment, while deliberation is about the ability of an agent to make decisions and engage consequent actions.

page 138:

- an agent needs to gather information from its environment (Sense), use the collected information in some decision process to decide what to do next (Think), engage new actions accordingly (Act), and repeat these steps over and over to create autonomy.

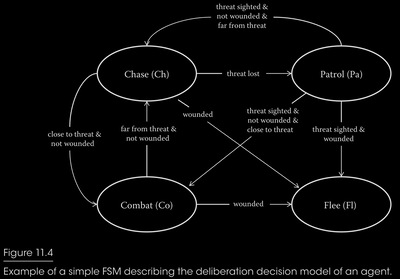

page 141:

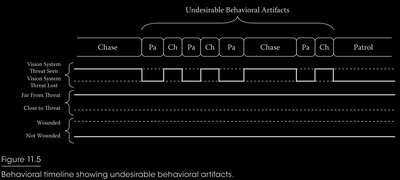

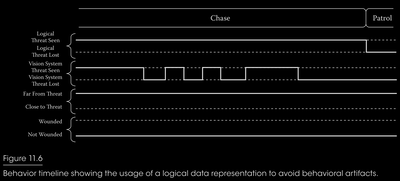

- By looking at the timeline, we can observe a lot of transitions in the behavior track. They represent the agent changing its stance from running at the threat to a slow-paced patrol stance multiple times within a couple of seconds, because the vision system keeps losing direct line of sight with the threat. From the player’s perspective, the behavior transitions would seem off, and they would most likely be judged as undesirable behavioral artifacts caused for no apparent reason. On top of that, the animation system might not even be responsive enough to execute these fast stance transitions without creating animation popping artifacts. This example shows that responsiveness isn’t the only criterion that deliberation decision models need to consider; sustaining actions for the proper amount of time is also crucial to delivering believable behaviors. In this case, we can solve this issue by hooking the transition’s threat-sighted symbol to a logical representation of seeing/losing a threat in a chase that would include some form of filtering (using hysteresis algorithms or other similar methods) to avoid creating undesirable oscillations. Figure 11.6 shows an ideal version of the timeline resulting from that logical representation.
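One possible filter for the threat-sighted symbol, sketched as time-based hysteresis: the symbol turns on as soon as line of sight is regained, but only turns off after line of sight has been lost continuously for `lose_after_s` seconds. `ThreatSightedFilter` and the 2-second threshold are hypothetical; the chapter only says some form of hysteresis or similar filtering is used.

```python
class ThreatSightedFilter:
    """Turns raw line-of-sight samples into a stable 'threat sighted' symbol."""

    def __init__(self, lose_after_s: float = 2.0) -> None:
        self.lose_after_s = lose_after_s
        self.sighted = False
        self.time_without_los = 0.0

    def update(self, has_line_of_sight: bool, dt: float) -> bool:
        if has_line_of_sight:
            # Switch on immediately and reset the loss timer.
            self.sighted = True
            self.time_without_los = 0.0
        else:
            # Switch off only after a sustained loss of line of sight.
            self.time_without_los += dt
            if self.time_without_los >= self.lose_after_s:
                self.sighted = False
        return self.sighted


# Usage: a flickering vision result no longer toggles Chase/Patrol every frame.
threat_filter = ThreatSightedFilter(lose_after_s=2.0)
flicker = [True, False, True, False, False, True, False]
during_chase = [threat_filter.update(seen, dt=0.25) for seen in flicker]
after_losing = [threat_filter.update(False, dt=0.25) for _ in range(8)]
```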

page 142:

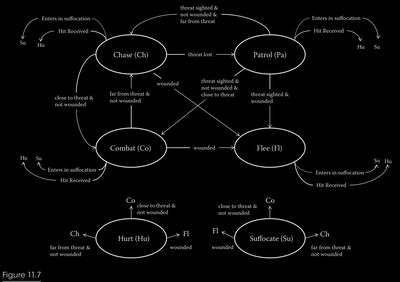

- Figure 11.7 represents the same FSM example presented in Section 11.3.1 but including two reaction states: Hurt and Suffocate. Because Chase, Patrol, Combat, and Flee are deliberation states that are designed to be active as long as possible, they are susceptible to being interrupted at any time. This explains why, in Figure 11.7, we can see that every deliberation state has transitions to every reaction state. Consequently, adding new reaction states to the model would require new transitions from all of the existing deliberation states. The same applies when adding new deliberation states to the model. With increased complexity, it’s easy to see that the model will be hard to understand and maintain, mostly because it tries to mix two very different kinds of transition dynamics within the same model. To solve this issue, it would be interesting to consider using multiple decision models that can interact together.

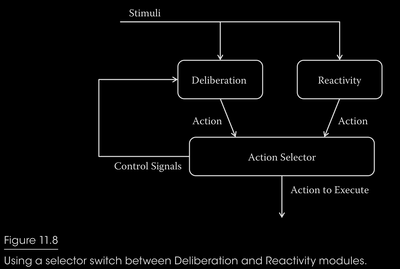

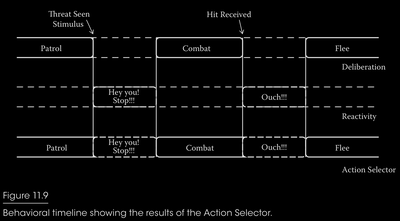

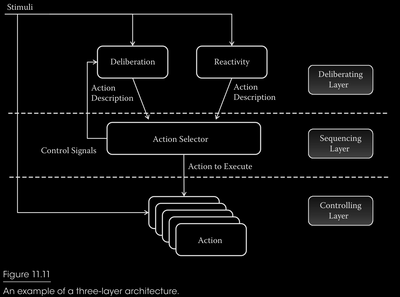

page 143:

- It is possible to avoid the limitation of using only one decision model. Figure 11.8 shows an architectural solution allowing multiple decision models. The design principle is pretty simple: create a module (Action Selector) responsible for acting as a selector switch between the Deliberation and Reactivity modules. With this architectural solution, Deliberation and Reactivity modules can use their own decision models as long as they can both receive the same stimuli and output their respective set of actions. For example, the Deliberation module could use the FSM presented in Figure 11.4, while the Reactivity module could use a very simple set of rules or a decision tree to evaluate which reaction should be requested according to perceived stimuli. As for the implementation of the Action Selector itself, it can also be done with its own decision model as long as it’s able to signal the Deliberation module when a new decision must be taken or to cancel the current deliberate action in order to execute a reaction. Figure 11.9 shows the resulting timeline.
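A minimal sketch of the Action Selector arrangement: both modules see the same stimuli, a reaction preempts the current deliberate action, and cancelling forces Deliberation to make a fresh decision afterwards. The two-rule Reactivity table, the stimulus strings, and the drastically simplified Deliberation stand-in are hypothetical; they are not the FSM from Figure 11.4.

```python
from typing import List, Optional, Set, Tuple


class Deliberation:
    """Stand-in for a deliberation FSM (hypothetical, heavily simplified)."""

    def __init__(self) -> None:
        self.current: Optional[str] = None

    def decide(self, stimuli: Set[str]) -> str:
        if "threat_sighted" in stimuli:
            self.current = "Chase"
        elif self.current is None:
            self.current = "Patrol"
        return self.current

    def cancel(self) -> None:
        self.current = None  # forces a fresh decision next time


class Reactivity:
    """A very simple rule set mapping stimuli to reactions; first match wins."""
    RULES: List[Tuple[str, str]] = [("no_air", "Suffocate"), ("damaged", "Hurt")]

    def react(self, stimuli: Set[str]) -> Optional[str]:
        for stimulus, reaction in self.RULES:
            if stimulus in stimuli:
                return reaction
        return None


class ActionSelector:
    """Switch between the two modules: a reaction preempts deliberate action."""

    def __init__(self, deliberation: Deliberation, reactivity: Reactivity) -> None:
        self.deliberation = deliberation
        self.reactivity = reactivity

    def update(self, stimuli: Set[str]) -> str:
        reaction = self.reactivity.react(stimuli)
        if reaction is not None:
            self.deliberation.cancel()  # interrupt the current deliberate action
            return reaction
        return self.deliberation.decide(stimuli)


selector = ActionSelector(Deliberation(), Reactivity())
timeline = [selector.update(s) for s in ({"threat_sighted"},
                                         {"threat_sighted", "damaged"},
                                         set())]
```

Adding a reaction here means adding one rule, with no new transitions out of every deliberation state.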

· Chapter 12 - Exploring HTN Planners through Example

- By: Troy Humphreys

page 149:

- hierarchical task networks (HTN)

page 153:

- In our previous example, using the tree trunk as a melee weapon and throwing boulders are both methods to the AttackEnemy compound task. The conditions in which we decide which method to use depend on whether the troll has a tree trunk or not. Here is an example of the AttackEnemy task using the notation above.

```
Compound Task [AttackEnemy]
    Method 0 [WsHasTreeTrunk == true]
        Subtasks [NavigateTo(EnemyLoc), DoTrunkSlam()]
    Method 1 [WsHasTreeTrunk == false]
        Subtasks [LiftBoulderFromGround(), ThrowBoulderAt(EnemyLoc)]
```

page 154:

-

By understanding how compound tasks work, it’s easy to imagine how we could have a large hierarchy that may start with a BeTrunkThumper compound task that is broken down into sets of smaller tasks, each of which is then broken into smaller tasks, and so on. This is how HTN forms a hierarchy that describes how our troll NPC is going to behave. It’s important to understand that compound tasks are really just containers for a set of methods that represent different ways to accomplish some high-level task. There is no compound task code running during plan execution.

-

A domain is the term used to describe the entire task hierarchy.

-

We start with a compound task called BeTrunkThumper. This root task encapsulates the “main idea” of what it means to be a Trunk Thumper.

```
Compound Task [BeTrunkThumper]
    Method [WsCanSeeEnemy == true]
        Subtasks [NavigateToEnemy(), DoTrunkSlam()]
    Method [true]
        Subtasks [ChooseBridgeToCheck(), NavigateToBridge(), CheckBridge()]
```

- As you can see with this root compound task, the first method defines the troll’s highest priority. If he can see the enemy, he will navigate using the NavigateToEnemy task and attack his enemy with the DoTrunkSlam task. If not, he will fall through to the next method. This next method will run three tasks: choose the next bridge to check, navigate to that bridge, and check the bridge for enemies. Let’s take a look at the primitive tasks that make up these methods and the rest of the domain.

```
Primitive Task [DoTrunkSlam]
    Operator [AnimatedAttackOperator(TrunkSlamAnimName)]

Primitive Task [NavigateToEnemy]
    Operator [NavigateToOperator(EnemyLocRef)]
    Effects [WsLocation = EnemyLocRef]

Primitive Task [ChooseBridgeToCheck]
    Operator [ChooseBridgeToCheckOperator]

Primitive Task [NavigateToBridge]
    Operator [NavigateToOperator(NextBridgeLocRef)]
    Effects [WsLocation = NextBridgeLocRef]

Primitive Task [CheckBridge]
    Operator [CheckBridgeOperator(SearchAnimName)]
```

page 155:

-

With a domain made up of compound and primitive tasks, we are starting to form an image of how these are put together to represent an NPC. Combine that with the world state and we can talk about the workhorse of our HTN, the planner.

-

There are three conditions that will force the planner to find a new plan: the NPC finishes or fails the current plan, the NPC does not have a plan, or the NPC’s world state changes via a sensor.

-

To do this, the planner starts with a root compound task that represents the problem domain we are trying to plan for. Using our earlier example, this root task would be the BeTrunkThumper task. This root task is pushed onto the TasksToProcess stack. Next, the planner creates a copy of the world state. The planner will be modifying this working world state to “simulate” what will happen as tasks are executed. After these initialization steps are taken, the planner begins to iterate on the tasks to process. On each iteration, the planner pops the next task off the TasksToProcess stack. If it is a compound task, the planner tries to decompose it by searching through its methods for the first one whose conditions are valid. If a method is found, that method’s subtasks are added onto the TasksToProcess stack. If a valid method is not found, the planner’s state is rolled back to the last compound task that was decomposed.

page 156:

- As you might have realized, the planner uses a depth-first search to find a valid plan. This does mean that you may have to explore the whole domain to find a valid plan. However, it’s important to remember that you are traversing a hierarchy of tasks. This hierarchy allows the planner to cull large sections of the network via the compound task’s methods. Because we aren’t using a heuristic or cost—such as with A* and Dijkstra searches—we can skip any kind of sorting.
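A compact sketch of the decomposition loop described above, under stated assumptions: the world state is a plain dict, method conditions and task preconditions/effects are Python callables, and operators are omitted so the plan is just a list of primitive task names. `Primitive`, `Compound`, `Method`, and `find_plan` are hypothetical names. Each decomposition records a snapshot so the planner can roll back and resume that compound task at its next method; this sketch also rolls back when a primitive task's precondition fails. The DoTrunkSlam precondition is added only to exercise rollback.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Union

WorldState = Dict[str, object]


@dataclass
class Primitive:
    precondition: Callable[[WorldState], bool] = lambda ws: True
    apply_effects: Callable[[WorldState], None] = lambda ws: None


@dataclass
class Method:
    condition: Callable[[WorldState], bool]
    subtasks: List[str]


@dataclass
class Compound:
    methods: List[Method] = field(default_factory=list)


Domain = Dict[str, Union[Primitive, Compound]]


def find_plan(domain: Domain, root: str, world_state: WorldState) -> List[str]:
    """Depth-first HTN decomposition with rollback; returns primitive task names."""
    working = dict(world_state)   # the planner simulates on a copy
    tasks_to_process = [root]     # the TasksToProcess stack
    final_plan: List[str] = []
    history = []                  # snapshots taken at each decomposition
    next_method = 0               # where to resume a compound task after rollback

    def rollback() -> bool:
        """Restore the last decomposed compound task and queue its next method."""
        nonlocal tasks_to_process, final_plan, working, next_method
        if not history:
            return False
        tasks_to_process, final_plan, working, task_name, next_method = history.pop()
        tasks_to_process.append(task_name)
        return True

    while tasks_to_process:
        name = tasks_to_process.pop()
        task = domain[name]
        start, next_method = next_method, 0
        if isinstance(task, Compound):
            for i in range(start, len(task.methods)):
                method = task.methods[i]
                if method.condition(working):
                    history.append((list(tasks_to_process), list(final_plan),
                                    dict(working), name, i + 1))
                    # Push in reverse so the first subtask is popped first.
                    tasks_to_process.extend(reversed(method.subtasks))
                    break
            else:  # no valid method left for this compound task
                if not rollback():
                    return []
        elif task.precondition(working):
            task.apply_effects(working)
            final_plan.append(name)
        elif not rollback():
            return []
    return final_plan


domain: Domain = {
    "BeTrunkThumper": Compound([
        Method(lambda ws: ws["WsCanSeeEnemy"], ["NavigateToEnemy", "DoTrunkSlam"]),
        Method(lambda ws: True, ["ChooseBridgeToCheck", "NavigateToBridge", "CheckBridge"]),
    ]),
    "NavigateToEnemy": Primitive(apply_effects=lambda ws: ws.update(WsLocation="EnemyLoc")),
    # Hypothetical precondition, added to show rollback: a slam needs a trunk.
    "DoTrunkSlam": Primitive(precondition=lambda ws: ws.get("WsHasTreeTrunk", False)),
    "ChooseBridgeToCheck": Primitive(),
    "NavigateToBridge": Primitive(apply_effects=lambda ws: ws.update(WsLocation="NextBridgeLoc")),
    "CheckBridge": Primitive(),
}
```

With no trunk, the first method starts decomposing, DoTrunkSlam fails, and the planner rolls back (discarding the simulated move toward the enemy) to the bridge-checking method.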

page 157:

- After seeing our troll in game, the designers think that the tree trunk attack is a little overpowered. They suggest that the trunk breaks after three attacks, forcing the troll to search for another one. First we can add the property WsTrunkHealth to the world state.

```
Compound Task [BeTrunkThumper]
    Method [WsCanSeeEnemy == true]
        Subtasks [AttackEnemy()] // using the new compound task
    Method [true]
        Subtasks [ChooseBridgeToCheck(), NavigateToBridge(), CheckBridge()]

Compound Task [AttackEnemy] // new compound task
    Method [WsTrunkHealth > 0]
        Subtasks [NavigateToEnemy(), DoTrunkSlam()]
    Method [true]
        Subtasks [FindTrunk(), NavigateToTrunk(), UprootTrunk(), AttackEnemy()]

Primitive Task [DoTrunkSlam]
    Operator [DoTrunkSlamOperator]
    Effects [WsTrunkHealth += -1]

Primitive Task [UprootTrunk]
    Operator [UprootTrunkOperator]
    Effects [WsTrunkHealth = 3]

Primitive Task [NavigateToTrunk]
    Operator [NavigateToOperator(FoundTrunk)]
    Effects [WsLocation = FoundTrunk]
```

page 159:

- The designer asks you to implement a behavior that will chase after the enemy and react once he sees the enemy again. Let’s look at the changes we could make to the domain to handle this issue.

Compound Task [BeTrunkThumper]

Method [ WsCanSeeEnemy == true]

Subtasks [AttackEnemy()]

Method [ WsHasSeenEnemyRecently == true]//New method

Subtasks [NavToLastEnemyLoc(), RegainLOSRoar()]

Method [true]

Subtasks [ChooseBridgeToCheck(), NavigateToBridge(), CheckBridge()]

Primitive Task [NavToLastEnemyLoc]

Operator [NavigateToOperator(LastEnemyLocation)]

Effects [WsLocation = LastEnemyLocation]

Primitive Task [RegainLOSRoar]

Preconditions [WsCanSeeEnemy == true]

Operator [RegainLOSRoar()]

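- The problem: the troll only reaches this method when it cannot see the enemy. During planning, WsCanSeeEnemy is therefore still false and RegainLOSRoar’s precondition fails, so the new method can never produce a plan. Expected effects solve this. They are effects that get applied to the world state only during planning and plan validation. The idea is that you can express changes in the world state that should happen as a result of tasks being executed. This allows the planner to keep planning farther into the future based on what it believes will be accomplished along the way.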

Primitive Task [NavToLastEnemyLoc]

Operator [NavigateToOperator(LastEnemyLocation)]

Effects [WsLocation = LastEnemyLocation]

ExpectedEffects [WsCanSeeEnemy = true]

- Now when this task gets popped off the decomposition list, the working world state will get updated with the expected effect, and the RegainLOSRoar task will be allowed to proceed with adding tasks to the chain. This simple behavior could have been implemented a couple of different ways, but expected effects came in handy more than a few times during the development of Transformers: Fall of Cybertron. They are a simple way to be just a little more expressive in an HTN domain.
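A minimal Python sketch of the distinction follows. It assumes effects are simple key assignments, so arithmetic effects like `WsTrunkHealth += -1` are out of scope. `PrimitiveTask`, `apply_while_planning`, `apply_after_execution` and `plan_is_valid` are hypothetical names, not the book's code.

```python
from dataclasses import dataclass, field
from typing import Dict, List

WorldState = Dict[str, object]


@dataclass
class PrimitiveTask:
    name: str
    preconditions: WorldState = field(default_factory=dict)
    effects: WorldState = field(default_factory=dict)
    expected_effects: WorldState = field(default_factory=dict)


def holds(task: PrimitiveTask, ws: WorldState) -> bool:
    return all(ws.get(key) == value for key, value in task.preconditions.items())


def apply_while_planning(task: PrimitiveTask, ws: WorldState) -> WorldState:
    """Planning and plan validation see effects plus expected effects."""
    return {**ws, **task.effects, **task.expected_effects}


def apply_after_execution(task: PrimitiveTask, ws: WorldState) -> WorldState:
    """The real world state only receives the regular effects."""
    return {**ws, **task.effects}


def plan_is_valid(plan: List[PrimitiveTask], ws: WorldState) -> bool:
    for task in plan:
        if not holds(task, ws):
            return False
        ws = apply_while_planning(task, ws)
    return True


start = {"WsCanSeeEnemy": False, "WsLocation": "Bridge"}
roar = PrimitiveTask("RegainLOSRoar", preconditions={"WsCanSeeEnemy": True})
nav_plain = PrimitiveTask("NavToLastEnemyLoc", effects={"WsLocation": "LastEnemyLocation"})
nav_expected = PrimitiveTask(
    "NavToLastEnemyLoc",
    effects={"WsLocation": "LastEnemyLocation"},
    expected_effects={"WsCanSeeEnemy": True},
)

print(plan_is_valid([nav_plain, roar], start))     # False: the roar precondition fails
print(plan_is_valid([nav_expected, roar], start))  # True
print(apply_after_execution(nav_expected, start)["WsCanSeeEnemy"])  # False
```

After the real navigation, WsCanSeeEnemy only becomes true if the troll's senses report it. Presumably, a failed runtime check of RegainLOSRoar's precondition would then trigger a replan. How the surrounding system reacts here is an assumption.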

page 160:

- To this point, we have been decomposing compound tasks based on the order of the task’s methods. This tends to be a natural way of going about our search, but consider these attack changes to our Trunk Thumper domain.

Compound Task [AttackEnemy]

Method [WsTrunkHealth > 0, AttackedRecently == false, CanNavigateToEnemy == true]

Subtasks [NavigateToEnemy(), DoTrunkSlam(), RecoveryRoar()]

Method [WsTrunkHealth == 0]

Subtasks [FindTrunk(), NavigateToTrunk(), UprootTrunk(), AttackEnemy()]

Method [true]

Subtasks [PickupBoulder(), ThrowBoulder()]

Primitive Task [DoTrunkSlam]

Operator [DoTrunkSlamOperator]

Effects [WsTrunkHealth += -1, AttackedRecently = true]

Primitive Task [RecoveryRoar]

Operator [PlayAnimation(TrunkSlamRecoverAnim)]

Primitive Task [PickupBoulder]

Operator [PickupBoulder()]

Primitive Task [ThrowBoulder]

Operator [ThrowBoulder()]
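Because methods are tried strictly in order, their order acts as a priority list. The hedged Python sketch below covers only the `AttackEnemy` method selection above; the `choose_attack_method` helper and the state dictionaries are hypothetical. It shows how a single trunk slam flips the next decision to the boulder throw.

```python
from typing import Callable, Dict, List, Tuple

WorldState = Dict[str, object]

# AttackEnemy's methods, in declaration order (the first match wins).
ATTACK_ENEMY_METHODS: List[Tuple[Callable[[WorldState], bool], List[str]]] = [
    (lambda ws: ws["WsTrunkHealth"] > 0 and not ws["AttackedRecently"] and ws["CanNavigateToEnemy"],
     ["NavigateToEnemy", "DoTrunkSlam", "RecoveryRoar"]),
    (lambda ws: ws["WsTrunkHealth"] == 0,
     ["FindTrunk", "NavigateToTrunk", "UprootTrunk", "AttackEnemy"]),
    (lambda ws: True,
     ["PickupBoulder", "ThrowBoulder"]),
]


def choose_attack_method(ws: WorldState) -> List[str]:
    for condition, subtasks in ATTACK_ENEMY_METHODS:
        if condition(ws):
            return subtasks
    return []  # never reached here: the last method's condition is always true


fresh = {"WsTrunkHealth": 3, "AttackedRecently": False, "CanNavigateToEnemy": True}
after_slam = {**fresh, "WsTrunkHealth": 2, "AttackedRecently": True}  # DoTrunkSlam's effects
enemy_out_of_reach = {**fresh, "CanNavigateToEnemy": False}

print(choose_attack_method(fresh))               # trunk slam
print(choose_attack_method(after_slam))          # boulder
print(choose_attack_method(enemy_out_of_reach))  # boulder
```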

page 165:

- Partial planning is one of the most powerful features of HTN. In simplest terms, it lets the planner stop short of fully decomposing a complete plan. HTN is able to do this because it uses forward decomposition, or forward search, to find plans. That is, the planner starts with the current world state and plans forward in time from there. This allows the planner to plan only a few steps ahead.

- GOAP and STRIPS planner variants, on the other hand, use a backward search [Orkin 04]. This means the search makes its way from a desired goal state toward the current world state. Searching this way means the planner has to complete the entire search in order to know what first step to take.

· Chapter 13 - Hierarchical Plan-Space Planning for Multi-unit Combat Maneuvers

- By: William van der Sterren

page 181:

- A third way to consider fewer plans is the hierarchical plan-space planner’s ability to plan from the “middle-out.” In the military, planning specialists mix forward planning and reverse planning, sometimes starting with the critical step in the middle. When starting in the middle (for example, with the air landing or a complex attack), they subsequently plan forward to mission completion and backward to mission start. The military do so because starting with the critical step drastically reduces the number of planning options to consider.

· Chapter 14 - Phenomenal AI Level-of-Detail Control with the LOD Trader

- By: Ben Sunshine-Hill

page 188:

- BIR = Break in Reality

- An unrealistic state (US) BIR is the most immediate and obvious type of BIR, where a character’s immediately observable simulation is wrong (e.g., a character eating from an empty plate, or running in place against a wall).

- A fundamental discontinuity (FD) BIR is a little more subtle, but not by much: it occurs when a character’s current state is incompatible with the player’s memory of his past state (e.g., a character disappearing while momentarily around a corner, or having been frozen in place for hours while the player was away).

page 189:

- An unrealistic long-term behavior (ULTB) BIR is the subtlest: it occurs only when an extended period of observation reveals problems with a character’s behavior (e.g., a character wandering randomly instead of having goal-driven behaviors).

-

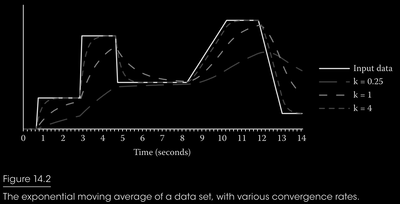

a tool which will be used in a lot of them: the exponential moving average (EMA). The EMA is a method for smoothing and averaging an ongoing sequence of measurements. Given an input function $F(t)$ we produce the output function $G(t)$. We initialize $(0) = F(0)$, and then at each time $t$ we update $G(t)$ as $G(t) = (1 - \alpha)F(t)+ \alpha G(t- \Delta t)$ , where Δt is the timestep since the last measurement. The $\alpha$ in that equation is calculated as $\alpha = e^{-k \Delta t}$, where $k$ is the convergence rate (higher values lead to faster changes in the average). You can tune $k$ to change the smoothness of the EMA, and how closely it tracks the input function. We’re going to use the EMA a lot in these models, so it’s a good idea to familiarize yourself with it (Figure 14.2).
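The EMA update described above fits in a few lines of Python. The `ExponentialMovingAverage` class name is hypothetical. The usage below checks a useful consequence of $\alpha = e^{-k \Delta t}$: for a constant input, one step of $\Delta t = 1$ gives the same result as two steps of $\Delta t = 0.5$. The average therefore does not depend on the frame rate at which it is sampled.

```python
import math


class ExponentialMovingAverage:
    """EMA with a variable timestep: G(t) = (1 - a) F(t) + a G(t - dt), a = exp(-k dt)."""

    def __init__(self, k: float, first_measurement: float):
        self.k = k  # convergence rate: larger k tracks the input faster
        self.value = first_measurement  # G(0) = F(0)

    def update(self, measurement: float, dt: float) -> float:
        alpha = math.exp(-self.k * dt)
        self.value = (1.0 - alpha) * measurement + alpha * self.value
        return self.value


# The input jumps from 0 to 1 right after t = 0; sample it once with dt = 1 and twice with dt = 0.5.
coarse = ExponentialMovingAverage(k=1.0, first_measurement=0.0)
fine = ExponentialMovingAverage(k=1.0, first_measurement=0.0)
coarse.update(1.0, dt=1.0)
fine.update(1.0, dt=0.5)
fine.update(1.0, dt=0.5)
print(coarse.value, fine.value)  # both approximately 1 - e^-1, about 0.632
```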

· Chapter 15 - Runtime Compiled C++ for Rapid AI Development

- By: Doug Binks, Matthew Jack, and Will Wilson

· Chapter 16 - Plumbing the Forbidden Depths Scripting and AI

- By: Mike Lewis

page 220:

- The most successful approaches to scripting will generally fall into one of two camps. First is the “scripts as master” perspective, wherein scripts control the high-level aspects of agent decision making and planning. The other method sees “scripts as servant,” where some other architecture controls the overall activity of agents, but selectively deploys scripts to attain specific design goals or create certain dramatic effects.

- In general, master-style systems work best in one of two scenarios. In the optimal case, a library of ready-to-use tools already exists, and scripting can become the “glue” that combines these techniques into a coherent and powerful overarching model for agent behavior.

- Servant scripts are most effective when design requires a high degree of specificity in agent behavior. This is the typical sense in which interactions are thought of as “scripted”: a set of possible scenarios is envisioned by the designers, and special-case logic for reacting to each scenario is put in place by the AI implementation team. Servant scripts need not be entirely reactive, however; simple scripted loops and behavioral patterns can make for excellent ambient or “background” AI.

page 232:

- Last, but certainly not least, integrated architectures provide an illustrative method for writing almost any large-scale code. The layered approach has been heavily encouraged for decades, with notable proponents including Fred Brooks and the SICP course from MIT. Learning to structure code in this way can be a powerful force multiplier for creating clean, well-separated modules for the rest of the project, even well outside the scope of AI systems.

- 1986 MIT course: https://www.youtube.com/playlist?list=PL8FE88AA54363BC46

page 233:

- The chief problem with overusing scripting is combinatorial explosion.

page 236:

- When using scripting, this often simply boils down to keeping a trace log of the steps that have been performed by the agent, and, where applicable, what branches have been selected and how often loops have been repeated. Being able to select an agent and view a debug listing of its complete script state is also an invaluable tool.

- Scripts should be seen as a sort of glue that attaches various decision-making, planning, and knowledge representation systems into a cohesive and powerful whole.
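The trace log described above can be as small as the following Python sketch. It records steps, counts which branch choices were taken and how many times each loop ran, and produces a per-agent debug listing. `ScriptTrace` and the `guard_patrol` script are hypothetical illustrations, not code from the chapter.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScriptTrace:
    """Per-agent record of what a script did, for a debug listing."""
    agent: str
    steps: List[str] = field(default_factory=list)
    branches: Counter = field(default_factory=Counter)  # (branch, choice) -> times taken
    loops: Counter = field(default_factory=Counter)     # loop label -> iterations

    def step(self, name: str) -> None:
        self.steps.append(name)

    def branch(self, label: str, choice: str) -> None:
        self.branches[(label, choice)] += 1

    def loop(self, label: str) -> None:
        self.loops[label] += 1

    def listing(self) -> str:
        lines = [f"[{self.agent}] " + " -> ".join(self.steps)]
        lines += [f"  branch {label}: {choice} x{n}" for (label, choice), n in sorted(self.branches.items())]
        lines += [f"  loop {label}: {n} iterations" for label, n in sorted(self.loops.items())]
        return "\n".join(lines)


def guard_patrol(trace: ScriptTrace, enemy_visible_each_tick: List[bool]) -> None:
    """Hypothetical servant-style patrol script, instrumented with the trace."""
    for visible in enemy_visible_each_tick:
        trace.loop("patrol")
        trace.step("MoveToNextWaypoint")
        trace.branch("enemy_visible", "attack" if visible else "keep_patrolling")
        if visible:
            trace.step("Attack")


trace = ScriptTrace("guard_01")
guard_patrol(trace, [False, False, True])
print(trace.listing())
```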

Part 3 - Movement and Pathfinding

· Chapter 17 - Pathfinding Architecture Optimizations

- By: Steve Rabin and Nathan R. Sturtevant

page 242:

- A* and pathfinding

- Precompute Every Single Path (Roy–Floyd–Warshall): While at first glance it seems ridiculous, it is possible to precompute every single path in a search space and store it in a look-up table. The memory implications are severe, but there are ways to temper the memory requirements and make it work for games. The algorithm is known in English-speaking circles as the Floyd–Warshall algorithm, while in Europe it is better known as Roy–Floyd.

- Roy–Floyd–Warshall is the absolute fastest way to generate a path at runtime. It should routinely be an order of magnitude faster than the best A* implementation.

- The look-up table is calculated offline before the game ships.

- The look-up table requires $O(n^2)$ entries, where $n$ is the number of nodes. For example, for a 100 by 100 grid search space, there are 10,000 nodes. Therefore, the memory required for the look-up table would be 100,000,000 entries (with 2 bytes per entry, this would be ~200 MB).

- Path generation is as simple as looking up the answer. The time complexity is $O(p)$, where $p$ is the number of nodes in the final path.

page 243:

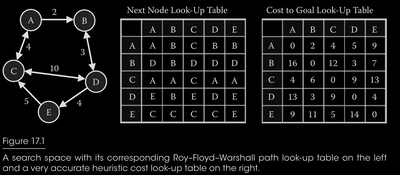

- Figure 17.1 shows a search space graph and the resulting tables generated by the Roy–Floyd–Warshall algorithm. A full path is found by consecutively looking up the next step in the path (left table in Figure 17.1). For example, if you want to find a final path from B to A, you would first look up the entry for (B, A), which is node D. You would travel to node D, then look up the next step of the path (D, A), which would be node E. By repeating this all the way to node A, you will travel the optimal path with an absolute minimum amount of CPU work. If there are dynamic obstacles in the map which must be avoided, this approach can be used as a very accurate heuristic estimate, provided that distances are stored in the look-up table instead of the next node to travel to (right table in Figure 17.1).

- As we mentioned earlier, in games you can make the memory requirement more reasonable by creating minimum node networks that are connected to each other [Waveren 01, van der Sterren 04]. For example, if you have 1000 total nodes in your level, this would normally require $1000^2$ = 1,000,000 entries in a table. But if you can create 50 zones of 20 nodes each, then the total number of entries required is $50 \times 20^2$ = 20,000 (which is 50 times fewer entries).
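The offline table build and the runtime walk (B to D, then D to E, and so on, as in the Figure 17.1 example) can be sketched in Python as follows. This is a minimal version assuming a small directed graph given as a dictionary of edge costs. The graph is hypothetical and is not the actual Figure 17.1 data; its weights were only chosen so that the B to A query walks through D and E as in the text. `roy_floyd_warshall` and `lookup_path` are illustrative names.

```python
import math
from typing import Dict, Hashable, List, Optional, Tuple

Node = Hashable
Pair = Tuple[Node, Node]


def roy_floyd_warshall(nodes: List[Node], edges: Dict[Pair, float]):
    """Offline step: O(n^3) time, O(n^2) tables of distances and next hops."""
    dist: Dict[Pair, float] = {(u, v): 0.0 if u == v else math.inf for u in nodes for v in nodes}
    next_hop: Dict[Pair, Optional[Node]] = {(u, v): u if u == v else None for u in nodes for v in nodes}
    for (u, v), cost in edges.items():
        if cost < dist[(u, v)]:
            dist[(u, v)] = cost
            next_hop[(u, v)] = v
    for k in nodes:
        for i in nodes:
            for j in nodes:
                via_k = dist[(i, k)] + dist[(k, j)]
                if via_k < dist[(i, j)]:
                    dist[(i, j)] = via_k
                    next_hop[(i, j)] = next_hop[(i, k)]  # first step on the way to k
    return dist, next_hop


def lookup_path(next_hop: Dict[Pair, Optional[Node]], start: Node, goal: Node) -> Optional[List[Node]]:
    """Runtime step: O(p) table lookups, p = number of nodes on the path."""
    if next_hop[(start, goal)] is None:
        return None  # goal unreachable from start
    path = [start]
    while path[-1] != goal:
        path.append(next_hop[(path[-1], goal)])
    return path


# Hypothetical undirected graph (not the actual Figure 17.1 data).
undirected = {("A", "C"): 5, ("B", "C"): 4, ("B", "D"): 1, ("C", "D"): 2, ("D", "E"): 1, ("E", "A"): 1}
edges = {**undirected, **{(v, u): c for (u, v), c in undirected.items()}}
dist, next_hop = roy_floyd_warshall(["A", "B", "C", "D", "E"], edges)
print(next_hop[("B", "A")])             # D
print(lookup_path(next_hop, "B", "A"))  # ['B', 'D', 'E', 'A']
print(dist[("B", "A")])                 # 3
```

The `dist` table is the kind of distance table the text suggests storing when the precomputed data serves as a heuristic rather than as the path itself.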

page 244:

- Another approach to reducing the memory requirement is to compress the Roy–Floyd–Warshall data. Published work [Botea 11] has shown the effectiveness of compressing the data, and this approach fared very well in the 2012 Grid-Based Path Planning competition (http://www.movingai.com/GPPC/), when sufficient memory was available.

- An alternate way to compress the Roy–Floyd–Warshall data is to take advantage of the structure of the environment. In many maps, but not all maps, there are relatively few optimal paths of significant length through the state space, and most of these paths overlap. Thus, it is possible to find a sparse number of “transit nodes” through which optimal paths cross [Bast et al. 07]. If, for every state in the state space, we store the path to all transit nodes for that state, as well as the optimal paths between all transit nodes, we can easily reconstruct the shortest path information between any two states, using much less space than when storing the shortest path between all pairs of states. This is one of several methods which have been shown to be highly effective on highway road maps [Abraham et al. 10].

- The full Roy–Floyd–Warshall data results in very fast pathfinding queries, at the cost of memory overhead. In many cases you might want to use less memory and more CPU, which suggests building strong but imperfect heuristics.

- Imagine if we store just a few rows/columns of the Roy–Floyd–Warshall data. This corresponds to keeping the shortest paths from a few select nodes. Fortunately, improved distance estimates between all nodes can be inferred from this data. If $d(x, y)$ is the distance between nodes $x$ and $y$, and we know $d(p, z)$ for all $z$, then the estimated distance between $x$ and $y$ is $h(x, y) = |d(p, x) - d(p, y)|$, where $p$ is a pivot node that corresponds to a single row/column in the Roy–Floyd–Warshall data. With multiple pivot nodes, we can perform multiple heuristic lookups and take the maximum. The improved estimates will reduce the cost of A* search. This approach has been developed in many contexts and been given many different names [Ng and Zhang 01, Goldberg and Harrelson 05, Goldenberg et al. 11, Rayner et al. 11]. We prefer the name Euclidean embedding, which we will justify shortly. First, we summarize the facts about this approach:

- Euclidean embeddings can be far more accurate than the default heuristics for a map, and in some maps are nearly as fast as Roy–Floyd–Warshall.

- The look-up table can be calculated before the game ships or at runtime, depending on the size and dynamic nature of the maps.

- The heuristic requires $O(kn)$ entries, where $n$ is the number of nodes and $k$ is the number of pivots.

- Euclidean embeddings provide a heuristic for guiding A* search. Given multiple heuristics, A* should usually take the maximum of all available heuristics.
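A minimal Python sketch of the pivot heuristic $h(x, y) = \max_p |d(p, x) - d(p, y)|$ feeding A* follows. It assumes an undirected, connected graph with non-negative costs. The same idea also appears under names such as ALT "landmarks" and "differential heuristics". The pivot rows are computed here with Dijkstra instead of by keeping rows of a full Roy–Floyd–Warshall table; for non-negative costs both give the same distances. The map, the pivot choice and all helper names are hypothetical. On undirected graphs this heuristic is consistent by the triangle inequality, which is what allows the simple closed set in `astar`.

```python
import heapq
import math
from typing import Callable, Dict, Hashable, List, Tuple

Graph = Dict[Hashable, Dict[Hashable, float]]


def dijkstra(graph: Graph, source: Hashable) -> Dict[Hashable, float]:
    """One row/column of the all-pairs table: shortest distances from source."""
    dist = {node: math.inf for node in graph}
    dist[source] = 0.0
    frontier = [(0.0, source)]
    while frontier:
        d, u = heapq.heappop(frontier)
        if d > dist[u]:
            continue  # stale queue entry
        for v, cost in graph[u].items():
            if d + cost < dist[v]:
                dist[v] = d + cost
                heapq.heappush(frontier, (dist[v], v))
    return dist


def build_pivot_table(graph: Graph, pivots: List[Hashable]) -> List[Dict[Hashable, float]]:
    """O(kn) storage: k pivots, one distance per node each."""
    return [dijkstra(graph, p) for p in pivots]


def pivot_heuristic(table: List[Dict[Hashable, float]], x: Hashable, y: Hashable) -> float:
    """max over pivots p of |d(p, x) - d(p, y)|."""
    return max((abs(row[x] - row[y]) for row in table), default=0.0)


def astar(graph: Graph, start, goal, h: Callable) -> Tuple[float, int]:
    """Plain A*; returns (path cost, number of expanded nodes)."""
    g = {start: 0.0}
    frontier = [(h(start, goal), start)]
    closed = set()
    while frontier:
        _, u = heapq.heappop(frontier)
        if u in closed:
            continue
        if u == goal:
            return g[u], len(closed)
        closed.add(u)
        for v, cost in graph[u].items():
            new_g = g[u] + cost
            if new_g < g.get(v, math.inf):
                g[v] = new_g
                heapq.heappush(frontier, (new_g + h(v, goal), v))
    return math.inf, len(closed)


def undirected(edge_list) -> Graph:
    graph: Graph = {}
    for u, v, cost in edge_list:
        graph.setdefault(u, {})[v] = cost
        graph.setdefault(v, {})[u] = cost
    return graph


# Hypothetical map: start S with four dead-end leaves L1..L4 and a corridor S-a-b-G.
graph = undirected([("S", f"L{i}", 1.0) for i in range(1, 5)]
                   + [("S", "a", 1.0), ("a", "b", 1.0), ("b", "G", 1.0)])
table = build_pivot_table(graph, pivots=["L1", "G"])

print(astar(graph, "S", "G", lambda x, y: 0.0))                           # (3.0, 7)
print(astar(graph, "S", "G", lambda x, y: pivot_heuristic(table, x, y)))  # (3.0, 3)
```

Both searches return the optimal cost. The zero heuristic behaves like Dijkstra and expands every dead-end leaf, while the two-pivot heuristic expands only the corridor.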

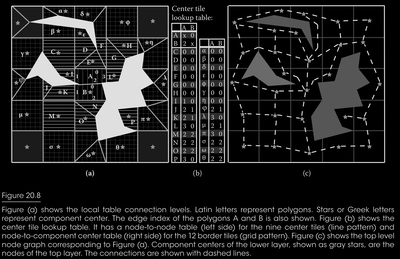

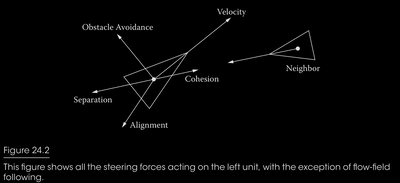

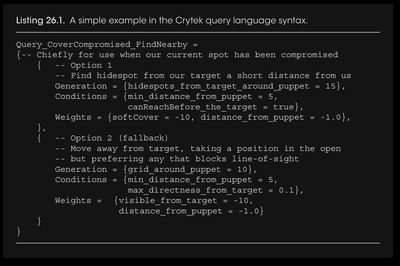

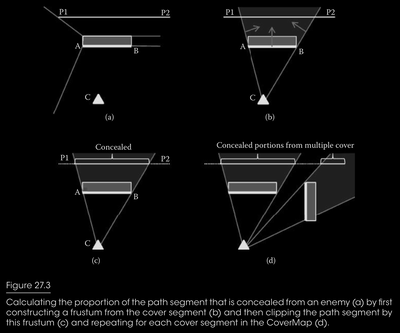

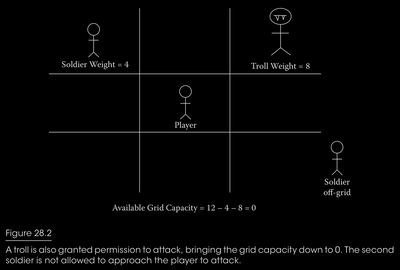

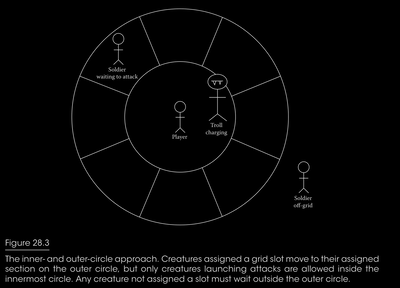

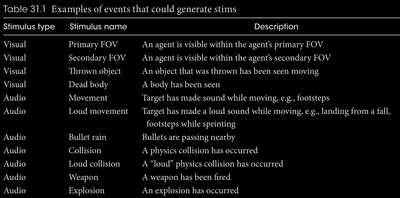

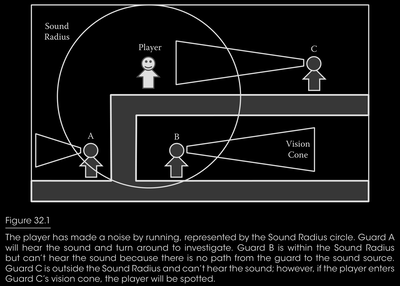

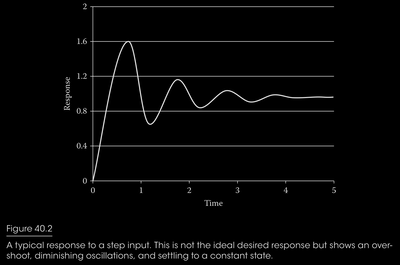

-