Custom Traffic Parser and Plotter (2017)

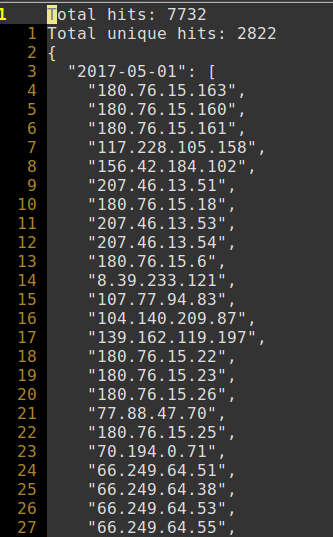

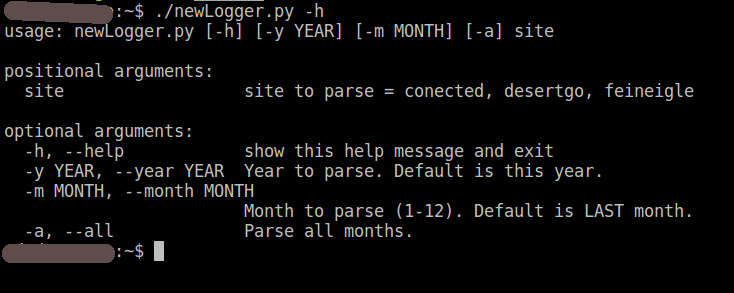

I wanted a simple way to output some bar graphs showing how many hits each of my sites was getting every month. Even though there are tons of off-the-shelf solutions, I, of course, had to roll my own. I started by writing Parser.py, which reads the Apache logs for a given site and month and writes out a small summary file. The file starts with a two-line plain-text header giving the month's total hits and unique hits (each IP counted once per day), followed by a JSON object that lists, for each day, every unique IP seen. Parser.py is scheduled via cron to run on the first of every month. These files are much smaller than the corresponding Apache logs, but of course they do not contain nearly as much information.
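For comparison, here is a minimal pure-Python sketch of the same summarizing step. It assumes Apache's Common or Combined Log Format (client IP in the first field, `[dd/Mon/yyyy:HH:MM:SS` in the fourth) and reads gzipped rotations with the standard `gzip` module instead of shelling out to `cat`/`gunzip`/`awk`. `open_log`, `day_and_ip` and `summarize_month` are hypothetical names, not functions from Parser.py.

```python
import gzip
import json
from collections import defaultdict
from datetime import datetime


def open_log(path):
    """Open a plain or gzipped Apache log as text (rotated logs end in .gz)."""
    return gzip.open(path, "rt") if path.endswith(".gz") else open(path)


def day_and_ip(line):
    """Return ('YYYY-MM-DD', ip) from a Common/Combined Log Format line."""
    fields = line.split()
    ip, stamp = fields[0], fields[3].lstrip("[")  # e.g. '25/Apr/2017:06:25:24'
    day = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S").strftime("%Y-%m-%d")
    return day, ip


def summarize_month(lines, year, month):
    """Build the month file text: two header lines, then {day: [unique IPs]} as JSON."""
    prefix = "{}-{:02d}".format(year, month)
    per_day = defaultdict(set)
    total = 0
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        day, ip = day_and_ip(line)
        if day.startswith(prefix):
            total += 1
            per_day[day].add(ip)
    body = {day: sorted(ips) for day, ips in sorted(per_day.items())}
    unique = sum(len(ips) for ips in body.values())  # unique per day, summed
    return "Total hits: {}\nTotal unique hits: {}\n{}".format(
        total, unique, json.dumps(body, indent=2))
```

Feeding `summarize_month` the lines from `open_log(...)` for every `access*` file should yield the same header-plus-JSON layout Parser.py writes, except that this sketch sorts each day's IPs, where Parser.py leaves them in set order.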

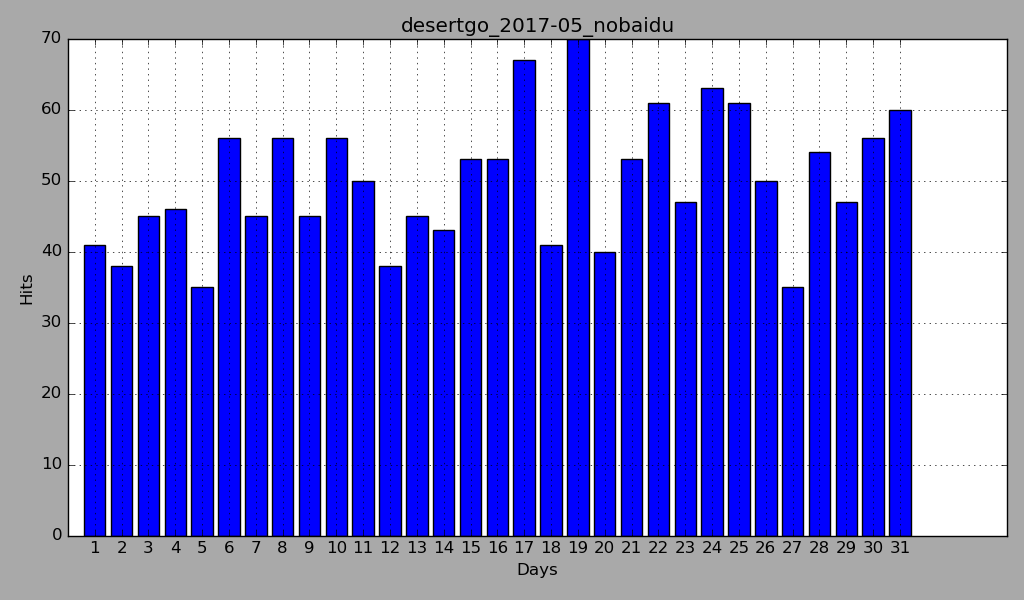

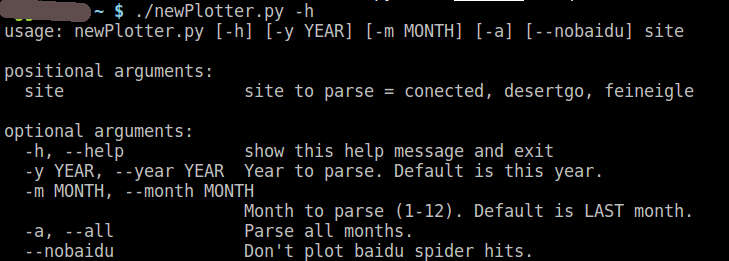

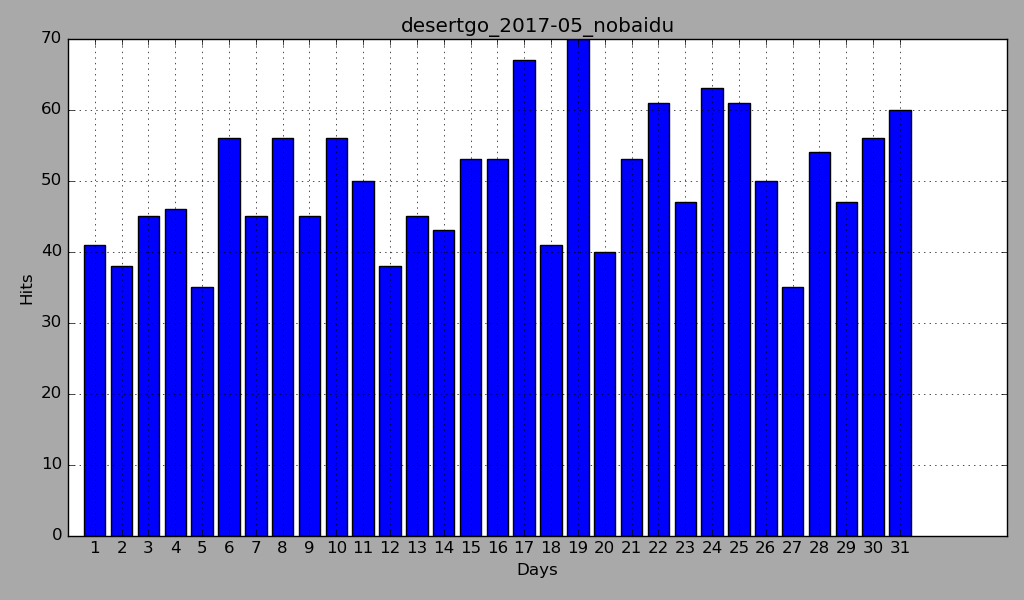

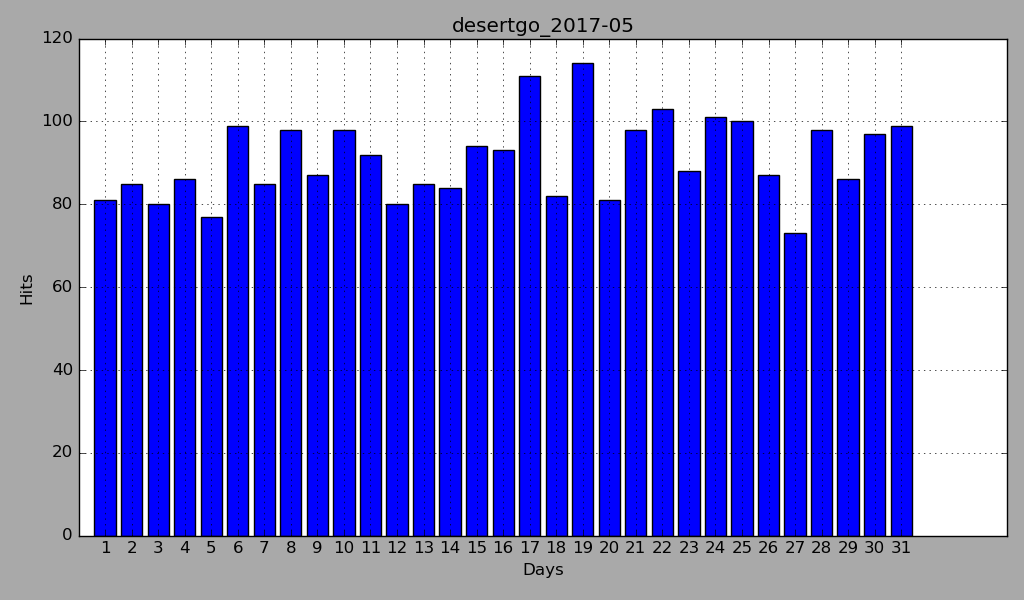

Next, I wrote Plotter.py to read these files and output the aforementioned bar graph via matplotlib. I added an option, --nobaidu, to strip out all of the traffic coming from 180.76.15.xxx. Baidu’s spider is relentless, weighing in at ~40 crawls per day. The same filter is easily extended to any other crawler's IP range; you could even add an option that takes the IP range to exclude on the command line.
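One way to do that, sketched under assumptions: a repeatable `--exclude` option (a hypothetical flag, not one Plotter.py has) that takes CIDR ranges and filters with the standard `ipaddress` module, so `180.76.15.0/24` covers the same addresses as the `startswith("180.76.15.")` test. `parse_networks` and `drop_excluded` are illustrative helper names.

```python
import argparse
import ipaddress


def parse_networks(values):
    """Turn CIDR strings like '180.76.15.0/24' into ip_network objects."""
    return [ipaddress.ip_network(v, strict=False) for v in values]


def drop_excluded(ips, networks):
    """Keep only the IPs that fall outside every excluded network."""
    kept = []
    for ip in ips:
        addr = ipaddress.ip_address(ip)
        if not any(addr in net for net in networks):
            kept.append(ip)
    return kept


parser = argparse.ArgumentParser()
# Hypothetical flag; may be repeated: --exclude 180.76.15.0/24 --exclude <another CIDR>
parser.add_argument("--exclude", action="append", default=[],
                    metavar="CIDR", help="IP range to leave out of the plot")

args = parser.parse_args(["--exclude", "180.76.15.0/24"])
networks = parse_networks(args.exclude)
print(drop_excluded(["180.76.15.7", "100.43.90.9"], networks))  # ['100.43.90.9']
```

Inside `parse_json`, the `--nobaidu` branch would then become `val = drop_excluded(val, networks)`. Matching on networks rather than string prefixes also handles ranges that don't end on an octet boundary, such as a /19.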

```python
#!/usr/bin/env python3
# Author: Mark Feineigle
# Create Date: 2017/06/23
import argparse
from collections import defaultdict, OrderedDict
from datetime import datetime as dt
import json
import os
from subprocess import getoutput  # Python 3 replacement for commands.getoutput

# Default to last month; in January that is December of the previous year
now = dt.now()
last_year, last_month = (now.year - 1, 12) if now.month == 1 else (now.year, now.month - 1)

parser = argparse.ArgumentParser()
parser.add_argument("site", help="site to parse = conected, desertgo, feineigle")
parser.add_argument("-y", "--year", default=str(last_year),
                    help="Year to parse. Default is the year of last month.")
parser.add_argument("-m", "--month", default=str(last_month),
                    help="Month to parse (1-12). Default is LAST month.")
parser.add_argument("-a", "--all", action="store_true",
                    help="Parse all months.")
args = parser.parse_args()


# scans the whole shebang of logs for a given site
def extract_all_logs(logPath):
    ips = []
    for filename in os.listdir(logPath):
        if filename.startswith("access"):
            # rotated logs are gzipped; awk prints the IP ($1) glued to "[dd/Mon/yyyy:HH:MM:SS" ($4)
            reader = "gunzip -c " if "gz" in filename else "cat "
            ips.append(getoutput(reader + os.path.join(logPath, filename)
                                 + " | awk '{print $1 $4}'"))
    # reformat the logs into a list:
    # ['2017-04-25 - 100.43.90.9', '2017-04-25 - 100.43.90.9', ...]
    ips = "\n".join(ips).split("\n")
    ips = [ip.split("[") for ip in ips if len(ip) > 1]
    return [dt.strptime(date, "%d/%b/%Y:%H:%M:%S").strftime("%Y-%m-%d") + " - " + ip
            for ip, date in ips]


def extract_month_from(year, month, ips):
    # sort out the month in question
    dd = defaultdict(set)
    tot_mo_hits = 0
    for line in ips:
        if line.startswith(year + "-" + month):
            tot_mo_hits += 1
            date, ip = line.strip().split(" - ")
            dd[date].add(ip)
    # order the month by day
    od = OrderedDict((key, list(val)) for key, val in sorted(dd.items()))
    data = json.dumps(od, indent=2)
    # unique per day: an IP seen on three different days counts three times
    tot_mo_unique = sum(len(day) for day in od.values())
    return data, tot_mo_hits, tot_mo_unique


def write_month_log(outPath, data, tot_mo_hits, tot_mo_unique):
    with open(outPath, "w") as fi:
        fi.write("Total hits: " + str(tot_mo_hits) + "\n")
        fi.write("Total unique hits: " + str(tot_mo_unique) + "\n")
        fi.write(data)


if __name__ == "__main__":
    site = args.site  # desertgo, feineigle, conected
    year = args.year
    months = [args.month.zfill(2)]  # pad with zeros if needed
    logPath = "/var/log/apache2/" + site + "/"
    archivePath = "/var/log/apache2/archive/" + site
    os.makedirs(archivePath, exist_ok=True)
    # Loop for extracting all months at once (-a argument)
    if args.all:
        months = [str(m).zfill(2) for m in range(1, 13)]
    ips = extract_all_logs(logPath)  # read the logs once, not once per month
    for month in months:
        outPath = os.path.join(archivePath, site + "_" + year + "-" + month + ".txt")
        data, tot_mo_hits, tot_mo_unique = extract_month_from(year, month, ips)
        if len(data) > 2:  # "{}" means no hits that month
            write_month_log(outPath, data, tot_mo_hits, tot_mo_unique)
```

Source: Parser.py
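Plotter.py discards the two header lines (everything before `data.find("{")`), so the monthly totals never reach the graphs. If you want them, for example to compare months side by side, here is a minimal sketch of reading both parts of an archive file. `read_month_file` is a hypothetical helper, and it assumes the exact `Label: number` header lines that `write_month_log` produces.

```python
import json


def read_month_file(text):
    """Split a Parser.py month file into (header totals, per-day JSON body)."""
    body_start = text.find("{")  # same split point Plotter.py uses
    totals = {}
    for line in text[:body_start].splitlines():
        label, _, value = line.partition(": ")
        if value:
            totals[label] = int(value)
    return totals, json.loads(text[body_start:])


example = 'Total hits: 3\nTotal unique hits: 2\n{\n  "2017-04-25": ["100.43.90.9"]\n}'
totals, days = read_month_file(example)
print(totals)  # {'Total hits': 3, 'Total unique hits': 2}
```

Looping this over the `site_YYYY-MM.txt` files would give the month-over-month hit counts directly from the headers.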

```python
#!/usr/bin/env python3
# Author: Mark Feineigle
# Create Date: 2017/06/24
import argparse
from datetime import datetime as dt
import json
import os

import matplotlib.pyplot as plt

# Default to last month; in January that is December of the previous year
now = dt.now()
last_year, last_month = (now.year - 1, 12) if now.month == 1 else (now.year, now.month - 1)

parser = argparse.ArgumentParser()
parser.add_argument("site", help="site to parse = conected, desertgo, feineigle")
parser.add_argument("-y", "--year", default=str(last_year),
                    help="Year to parse. Default is the year of last month.")
parser.add_argument("-m", "--month", default=str(last_month),
                    help="Month to parse (1-12). Default is LAST month.")
parser.add_argument("-a", "--all", action="store_true",
                    help="Parse all months.")
parser.add_argument("--nobaidu", action="store_true",
                    help="Don't plot baidu spider hits.")
args = parser.parse_args()


def read_json_log(logPath):
    with open(logPath, "r") as fi:
        data = fi.read()
    data = data[data.find("{"):]  # cut off the first 2 lines/header (hits)
    return json.loads(data)


def parse_json(data):
    xs, ys = [], []
    for key, val in sorted(data.items()):
        xs.append(float(key[-2:]))  # key/xs = days
        if args.nobaidu:
            val = [v for v in val if not v.startswith("180.76.15.")]
        ys.append(float(len(val)))  # val/ys = hits
    return xs, ys


def construct_plot(xs, ys, site, filename):
    if args.nobaidu:
        filename = filename + "_nobaidu"
    plt.rcParams["savefig.facecolor"] = "darkgrey"
    plt.figure(figsize=(10.24, 6))
    plt.bar(xs, ys, align="center")
    plt.xticks(xs)
    plt.title(filename)
    plt.xlabel("Days")
    plt.ylabel("Hits")
    plt.grid(True)
    plt.tight_layout(pad=1)
    # plt.show()  # NOTE remove after tests
    os.makedirs(os.path.join(site, "img"), exist_ok=True)
    plt.savefig(os.path.join(site, "img", filename + ".png"))
    plt.close()  # release the figure before the next month in the loop


def process_log(logPath, site, filename):
    try:
        data = read_json_log(logPath)
    except OSError:
        return  # no archive file for this month
    xs, ys = parse_json(data)
    if len(xs) == 0:  # there is no data
        return
    print("Processing {}".format(filename), end="\r", flush=True)
    construct_plot(xs, ys, site, filename)


if __name__ == "__main__":
    site = args.site
    year = args.year
    months = [args.month.zfill(2)]
    if args.all:
        months = [str(m).zfill(2) for m in range(1, 13)]
    for month in months:
        filename = site + "_" + year + "-" + month
        logPath = os.path.join(site, filename + ".txt")
        process_log(logPath, site, filename)
    print("")
```

Source: Plotter.py
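Because Parser.py only writes days that had traffic, a quiet day has no key in the JSON. The bar chart then shows an unlabeled gap instead of a zero bar, and the axis stops at the last day with hits. A minimal sketch of padding the series before plotting, using the standard `calendar.monthrange` for the month length; `full_month_series` is a hypothetical helper.

```python
import calendar


def full_month_series(year, month, xs, ys):
    """Pad parse_json's (xs, ys) so every day of the month has a bar, with 0 for quiet days."""
    hits_by_day = dict(zip((int(x) for x in xs), ys))
    n_days = calendar.monthrange(year, month)[1]  # (weekday of day 1, days in month)
    days = list(range(1, n_days + 1))
    return days, [hits_by_day.get(day, 0.0) for day in days]


days, hits = full_month_series(2017, 4, [25.0, 26.0], [3.0, 1.0])
print(len(days), hits[24], hits[0])  # 30 3.0 0.0
```

In Plotter.py this would sit between `parse_json` and `construct_plot`, which means passing `int(year)` and `int(month)` down through `process_log`.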